Client-side integration means that the client is able to communicate directly with the HPC resource using SSH or a custom communication method.

The mechanism and operations for custom client-side integration are very similar to those for custom cluster-side integration. However, the underlying architecture is different. In cluster-side integration, the customization affects the scripts that RSM executes on the cluster side. In client-side integration, only a thin layer of RSM on the client side is involved. This layer provides the APIs for executing the custom scripts, which are located on the client machine. The custom scripts are responsible for handling all aspects of job execution, including transferring files to and from the HPC staging directory (if needed).
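Because the custom scripts handle their own file transfers, a submit script typically has to assemble the copy commands itself. The following minimal sketch builds, but does not run, an `scp` command that copies one file to or from the HPC staging directory. The function name, the account and host values, and the assumption that the cluster side uses POSIX paths are illustrative only. They are not taken from the RSM sample scripts.

```python
from pathlib import Path, PurePosixPath


def build_scp_command(local_path: str, user: str, host: str,
                      staging_dir: str, upload: bool = True) -> list[str]:
    """Build (but do not run) an scp call for one file (hypothetical helper)."""
    # Assumption: the cluster side is Linux, so the staging path is POSIX.
    remote_path = PurePosixPath(staging_dir) / Path(local_path).name
    remote_spec = f"{user}@{host}:{remote_path}"
    # Upload copies local -> staging directory; download copies it back.
    if upload:
        src, dst = local_path, remote_spec
    else:
        src, dst = remote_spec, local_path
    return ["scp", "-q", src, dst]  # -q suppresses the progress meter


cmd = build_scp_command("/tmp/work/input.dat", "rsmuser", "submithost",
                        "/scratch/staging/job1")
```

A script could then run the returned list with `subprocess.run(cmd, check=True)`. Returning an argument list instead of a single shell string avoids quoting problems in a local shell.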

The RSM installation provides some prototype code for client integration that can be tailored and modified to meet specific customization needs.

When customizing files, you must choose a "keyword" that represents your custom HPC type. This is a short word or phrase that you append to the file names of your custom files, and that you use when defining a configuration in RSM to map the configuration to your custom files. The name is arbitrary, but it should be simple enough to append to file names. For example, if you are creating a customized version of an LSF cluster, your keyword might be "CUS_LSF". The only requirement is that you use the same capitalization consistently everywhere the keyword is referenced.
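To keep the keyword consistent, it can help to derive every custom file name from a single string. The sketch below uses two hypothetical helpers (`custom_file_names` and `case_mismatches` are not part of RSM). The first produces the file names used later in this example. The second flags references that differ from the keyword only in capitalization.

```python
def custom_file_names(keyword: str) -> dict[str, str]:
    """Derive this example's custom file names from one keyword (hypothetical)."""
    if not keyword or any(ch.isspace() for ch in keyword):
        raise ValueError("keyword must be non-empty and contain no whitespace")
    names = {"hpc_commands": f"hpc_commands_{keyword}.xml"}
    # Script names follow the <action>_<keyword>.py pattern used in this example.
    for action in ("submit", "status", "cancel", "cleanup"):
        names[action] = f"{action}_{keyword}.py"
    return names


def case_mismatches(keyword: str, references: list[str]) -> list[str]:
    """Return references that match the keyword only when case is ignored."""
    return [ref for ref in references
            if ref != keyword and ref.lower() == keyword.lower()]


names = custom_file_names("CUS_LSF")
suspicious = case_mismatches("CUS_LSF", ["CUS_LSF", "cus_lsf", "CUS_PBS"])
```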

For client-side integration, you will use the local client machine to perform the following steps:

1. Create a copy of the HPC commands file that most closely matches your custom cluster type, and replace the keyword in its file name with your custom cluster “keyword” (for example, hpc_commands_<keyword>.xml).
2. Add an entry to the job configuration file that associates your custom cluster “keyword” with the cluster-specific hpc_commands_<keyword> file.
3. Edit the cluster-specific hpc_commands_<keyword> file to reference the custom commands.
4. Provide cluster-specific scripts, code, or commands that perform the custom actions and return the required RSM output.

Once you have completed the steps above, you can create a configuration in RSM that will use your customized files.

The following sections discuss the steps to customize your integration.

You may also want to refer to the Configuring Custom Client-Side Integration tutorial on the Ansys Help site.

As part of the setup, you must create an hpc_commands file that includes your custom cluster “keyword” in its file name. This is an .xml file that contains the definition of the HPC commands to be used for the job execution. As a starting point, you can create a copy of one of the sample hpc_commands files that are included in the RSM installation. You may also want to create copies of sample command scripts to be used for job execution.

For this example, we will use the sample file with the suffix CIS (Client Integration Sample), which is based on integration with an LSF cluster.

Note that all the actions listed below should be performed on the client machine.

Using the RSM installation on your client machine, locate the directory [RSMInstall]\Config\xml.

Locate the sample file for command execution hpc_commands_CIS.xml.

Copy the content of the hpc_commands_CIS.xml file into a new file, hpc_commands_<YOURKEYWORD>.xml. If, for example, your keyword is “CUS_LSF”, the new file should be called hpc_commands_CUS_LSF.xml.
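If you prefer to script this step, you can make the copy with the Python standard library. In this minimal sketch, `copy_sample_commands` is a hypothetical helper. You pass it the path of your [RSMInstall]\Config\xml directory and your keyword. It refuses to overwrite an existing file, so a customized copy is not lost by accident.

```python
import shutil
from pathlib import Path


def copy_sample_commands(xml_dir: str, keyword: str,
                         sample: str = "hpc_commands_CIS.xml") -> Path:
    """Copy the CIS sample to hpc_commands_<keyword>.xml in the same directory."""
    src = Path(xml_dir) / sample
    dst = Path(xml_dir) / f"hpc_commands_{keyword}.xml"
    if dst.exists():
        # Do not silently overwrite a file you may already have customized.
        raise FileExistsError(dst)
    shutil.copyfile(src, dst)
    return dst
```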

The client-side integration requires a custom implementation to be provided for all the commands to be executed on the cluster. The standard RSM installation includes sample scripts for all these commands, which should be used as a starting point for the customization. The sample scripts are named submitGeneric.py, cancelGeneric.py, statusGeneric.py, scp.py, and cleanupSSH.py. They are located in the [RSMInstall]\RSM\Config\scripts directory.

While it is not strictly necessary to copy and rename the scripts, we have done so for consistency. The rest of the example assumes that the scripts have been copied and renamed to include the keyword chosen for the custom cluster (for example, submit_CUS_LSF.py, cancel_CUS_LSF.py, status_CUS_LSF.py, and cleanup_CUS_LSF.py). These scripts will have to be included in the custom job template, as shown in the following section, Modifying the Job Configuration File for a New Cluster Type.
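You can sketch the copy-and-rename step the same way. The mapping below follows the naming used in this example. `scp.py` is left out because the example does not rename it. The helper name `copy_sample_scripts` is hypothetical. The directory you pass in is assumed to be the local [RSMInstall]\RSM\Config\scripts directory.

```python
import shutil
from pathlib import Path

# Sample script -> prefix of the renamed copy, as used in this example.
# scp.py keeps its original name, so it is not listed.
RENAMES = {
    "submitGeneric.py": "submit",
    "cancelGeneric.py": "cancel",
    "statusGeneric.py": "status",
    "cleanupSSH.py": "cleanup",
}


def copy_sample_scripts(scripts_dir: str, keyword: str) -> list[Path]:
    """Copy each sample script to <prefix>_<keyword>.py (hypothetical helper)."""
    base = Path(scripts_dir)
    created = []
    for sample, prefix in RENAMES.items():
        dst = base / f"{prefix}_{keyword}.py"
        if dst.exists():
            raise FileExistsError(dst)  # keep any existing customization
        shutil.copyfile(base / sample, dst)
        created.append(dst)
    return created
```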

These sample scripts demonstrate a fully custom client integration on a standard LSF cluster and are provided only as an example. In general, custom client integrations do not target standard cluster types, so no samples are provided for custom client integrations on other cluster types.

Note: Any additional custom code that you want to provide as part of the customization should also be located in the [RSMInstall]\RSM\Config\scripts directory of your local (client) installation. Otherwise, you must provide the full path to the script along with its name.
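The lookup rule in this note can be expressed as a small function. A bare script name is resolved against the local scripts directory, and an absolute path is used as given. This sketches the rule as described here and is not RSM's own resolution code. `resolve_script` is a hypothetical name.

```python
from pathlib import Path


def resolve_script(name: str, scripts_dir: str) -> Path:
    """Resolve a script reference the way this note describes (hypothetical)."""
    path = Path(name)
    # Absolute paths are used as-is; bare names live in the local scripts directory.
    return path if path.is_absolute() else Path(scripts_dir) / path
```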

As part of the setup, you must add an entry for your custom cluster “keyword” in the jobConfiguration.xml file, and reference the files that are needed for that cluster job type.

Note that all the actions listed below should be performed on your client machine.

Locate the directory [Ansys 2024 R2 Install]/RSM/Config/xml.

Open the jobConfiguration.xml file and add an entry that follows the pattern shown in the sample code below. This code corresponds to the example in the preceding sections, which assumes that your cluster is most like an LSF cluster.

Note: Our example uses “CUS_LSF” as the keyword, but you must replace “YOURKEYWORD” with the actual custom cluster keyword that you have defined.
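If you maintain several custom keywords, you may prefer to add the entry with a script instead of by hand. The minimal sketch below uses `xml.etree.ElementTree` to append a `<keyword name="...">` element containing an `<hpcCommands name="hpc_commands_<keyword>.xml"/>` child, and places it next to any existing keyword entries. The helper name is hypothetical. The root element name `jobConfiguration` in the usage lines is an assumption made for illustration and has not been checked against the shipped file.

```python
import xml.etree.ElementTree as ET


def add_keyword_entry(xml_text: str, keyword: str) -> str:
    """Append a <keyword> entry for the custom HPC type (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    if any(k.get("name") == keyword for k in root.iter("keyword")):
        raise ValueError(f"keyword {keyword!r} is already defined")
    # Place the new entry beside the existing ones; fall back to the root element.
    parents = {child: parent for parent in root.iter() for child in parent}
    existing = next(root.iter("keyword"), None)
    parent = parents.get(existing, root) if existing is not None else root
    entry = ET.SubElement(parent, "keyword", name=keyword)
    ET.SubElement(entry, "hpcCommands", name=f"hpc_commands_{keyword}.xml")
    return ET.tostring(root, encoding="unicode")


# Usage with an assumed, simplified file layout:
sample = ('<jobConfiguration><keyword name="CIS">'
          '<hpcCommands name="hpc_commands_CIS.xml"/></keyword></jobConfiguration>')
print(add_keyword_entry(sample, "CUS_LSF"))
```

By default, `ElementTree` discards XML comments when parsing. Compare the result with the original file before saving it, or make the edit by hand if the file's comments matter to you.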

```xml
<keyword name="YOURKEYWORD">
  <hpcCommands name="hpc_commands_YOURKEYWORD.xml"/>
</keyword>
```

The cluster-specific HPC commands file is the configuration file used to specify the commands that will be used in the cluster integration. The file is in XML format and is located in the [RSMInstall]\RSM\Config\xml directory.

This section provides an example of a modified file, hpc_commands_CUS_LSF.xml. The cluster commands are provided by the sample scripts mentioned in the previous section. These scripts have been copied from the samples provided in the RSM installation and renamed to match the keyword chosen for the custom cluster.

This example is set up to run on a modified LSF cluster. If you are running on a different cluster type, you will need to choose a different parsing script (or write a new one) for the cluster type that you have chosen. Parsing scripts are available for the supported cluster types: LSF, PBS Pro, SLURM, Altair Grid Engine (UGE), and MSCC. The scripts for LSF, PBS Pro, Altair Grid Engine (UGE), and MSCC are named lsfParsing.py, pbsParsing.py, ugeParsing.py, and msccParsing.py, respectively. If you are using an unsupported cluster type, you will need to write your own parsing script. For details, refer to Parsing of the Commands Output.
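When adapting the example to another scheduler, choosing the parser is a simple lookup. The sketch below maps only the script names given in this section. SLURM is omitted solely because its script name is not listed here. `parsing_script_for` is a hypothetical helper.

```python
# Parsing scripts named in this section, keyed by cluster type.
PARSING_SCRIPTS = {
    "LSF": "lsfParsing.py",
    "PBS Pro": "pbsParsing.py",
    "UGE": "ugeParsing.py",
    "MSCC": "msccParsing.py",
}


def parsing_script_for(cluster_type: str) -> str:
    """Return the parsing script for a cluster type (hypothetical helper)."""
    try:
        return PARSING_SCRIPTS[cluster_type]
    except KeyError:
        # Types not mapped here need a parsing script of your own.
        raise LookupError(
            f"no parsing script mapped for {cluster_type!r}; write a custom one"
        ) from None
```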

The hpc_commands file provides the information on how commands or queries related to job execution are executed. The file can also refer to a number of environment variables. Details on how to provide custom commands, as well as the description of the environment variables, are provided in Writing Custom Code for RSM Integration.
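The `pythonapp` entries in the sample below refer to environment variables with the `%NAME%` syntax, for example `%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%`. RSM resolves these references itself. The following sketch is only an assumed model of that substitution. It can be handy for checking which path a reference will resolve to. Names that are not defined are left unchanged.

```python
import re

# %NAME% where NAME looks like an environment variable identifier.
_PLACEHOLDER = re.compile(r"%([A-Za-z_][A-Za-z0-9_]*)%")


def expand_placeholders(text: str, env: dict[str, str]) -> str:
    """Replace %NAME% with env[NAME]; leave unknown names untouched."""
    return _PLACEHOLDER.sub(lambda m: env.get(m.group(1), m.group(0)), text)


path = expand_placeholders(
    "%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/submit_CUS_LSF.py",
    {"RSM_HPC_SCRIPTS_DIRECTORY_LOCAL": "C:/RSM/Config/scripts"},  # example value
)
```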

```xml
<jobCommands version="3" name="Custom Cluster Commands">
  <environment>
    <env name="RSM_HPC_PARSE">LSF</env>
    <env name="RSM_HPC_PARSE_MARKER">START</env> <!-- Find "START" line before parsing according to parse type -->
    <env name="RSM_HPC_SSH_MODE">ON</env>
    <env name="RSM_HPC_CLUSTER_TARGET_PLATFORM">Linux</env>
    <!-- Still need to set RSM_HPC_PLATFORM=linx64 on Local Machine -->
  </environment>
  <submit>
    <primaryCommand name="submit">
      <properties>
        <property name="MustRemainLocal">true</property>
      </properties>
      <application>
        <pythonapp>%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/submit_CUS_LSF.py</pythonapp>
      </application>
      <arguments>
      </arguments>
    </primaryCommand>
    <postcommands>
      <command name="parseSubmit">
        <properties>
          <property name="MustRemainLocal">true</property>
        </properties>
        <application>
          <pythonapp>%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/lsfParsing.py</pythonapp>
        </application>
        <arguments>
          <arg>-submit</arg>
          <arg>
            <value>%RSM_HPC_PARSE_MARKER%</value>
            <condition>
              <env name="RSM_HPC_PARSE_MARKER">ANY_VALUE</env>
            </condition>
          </arg>
        </arguments>
        <outputs>
          <variableName>RSM_HPC_OUTPUT_JOBID</variableName>
        </outputs>
      </command>
    </postcommands>
  </submit>
  <queryStatus>
    <primaryCommand name="queryStatus">
      <properties>
        <property name="MustRemainLocal">true</property>
      </properties>
      <application>
        <pythonapp>%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/status_CUS_LSF.py</pythonapp>
      </application>
      <arguments>
      </arguments>
    </primaryCommand>
    <postcommands>
      <command name="parseStatus">
        <properties>
          <property name="MustRemainLocal">true</property>
        </properties>
        <application>
          <pythonapp>%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/lsfParsing.py</pythonapp>
        </application>
        <arguments>
          <arg>-status</arg>
          <arg>
            <value>%RSM_HPC_PARSE_MARKER%</value>
            <condition>
              <env name="RSM_HPC_PARSE_MARKER">ANY_VALUE</env>
            </condition>
          </arg>
        </arguments>
        <outputs>
          <variableName>RSM_HPC_OUTPUT_STATUS</variableName>
        </outputs>
      </command>
    </postcommands>
  </queryStatus>
  <cancel>
    <primaryCommand name="cancel">
      <properties>
        <property name="MustRemainLocal">true</property>
      </properties>
      <application>
        <pythonapp>%RSM_HPC_SCRIPTS_DIRECTORY_LOCAL%/cancel_CUS_LSF.py</pythonapp>
      </application>
      <arguments>
      </arguments>
    </primaryCommand>
  </cancel>
</jobCommands>
```
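The `parseSubmit` postcommand runs lsfParsing.py with the `-submit` flag and the `RSM_HPC_PARSE_MARKER` value, and its result is stored in `RSM_HPC_OUTPUT_JOBID`. The following hypothetical sketch illustrates the idea behind such a parser. It does not reproduce the contents of lsfParsing.py. It ignores everything up to the marker line (`START` in this example), then extracts the job ID from the standard LSF `bsub` confirmation, `Job <id> is submitted to ... queue <name>.` See Parsing of the Commands Output for what a real parsing script must report back to RSM.

```python
import re
from typing import Optional

# LSF's bsub confirmation line, e.g. "Job <12345> is submitted to queue <normal>."
_BSUB_JOB = re.compile(r"Job <(\d+)> is submitted")


def parse_submit_output(output: str, marker: Optional[str] = "START") -> Optional[str]:
    """Return the LSF job ID found after the marker line, or None (hypothetical)."""
    lines = output.splitlines()
    if marker:
        # Skip everything up to and including the marker line.
        for i, line in enumerate(lines):
            if line.strip() == marker:
                lines = lines[i + 1:]
                break
        else:
            return None  # marker never printed
    for line in lines:
        match = _BSUB_JOB.search(line)
        if match:
            return match.group(1)
    return None
```

If the marker never appears, the sketch returns `None`, which a custom script could treat as a failed submission.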

Note: Any custom code that you want to provide as part of the customization should also be located in the [RSMInstall]\RSM\Config\scripts directory of your local (client) installation. Otherwise, you must provide the full path to the script along with its name.

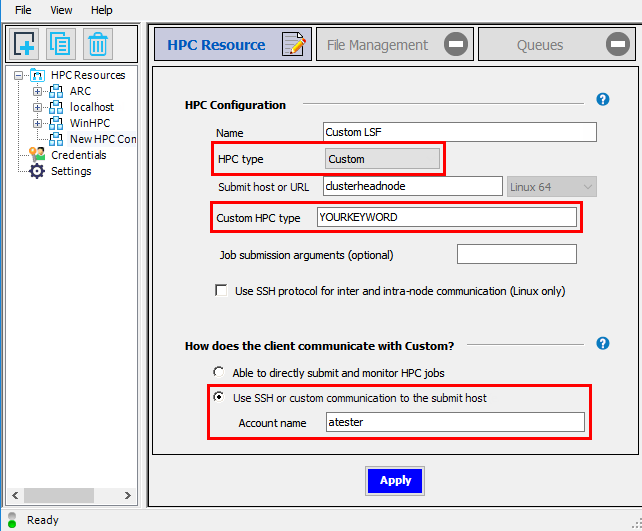

When creating a configuration for a custom cluster in RSM, you must set the HPC type to Custom and specify your custom cluster keyword in the Custom HPC type field. (For our example, you would enter CUS_LSF in the Custom HPC type field.)

A “custom client integration” means that you are running in SSH mode (or using another, non-RSM communication method). Therefore, when specifying how the client communicates with the cluster, you need to select Use SSH or custom communication to the submit host, and specify the account name that the Windows RSM client will use to access the remote Linux submit host.
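With SSH communication, the custom scripts run scheduler commands on the submit host under the account you specify here. The sketch below builds, but does not run, such an `ssh` call. It assumes an OpenSSH-compatible `ssh` client on the client machine and a POSIX shell on the Linux submit host, which is why it quotes each remote argument with `shlex.quote`. The account and host names are placeholders.

```python
import shlex


def build_ssh_command(account: str, host: str, remote_args: list[str]) -> list[str]:
    """Build (but do not run) an ssh call that executes remote_args on the submit host."""
    # ssh passes the remote command to the remote shell as a single string,
    # so each argument is quoted for a POSIX shell before joining.
    remote_command = " ".join(shlex.quote(arg) for arg in remote_args)
    return ["ssh", f"{account}@{host}", remote_command]


# Example: query LSF job 12345 as the configured account.
cmd = build_ssh_command("rsmuser", "submithost", ["bjobs", "-l", "12345"])
```

A status script could run this with `subprocess.run(cmd, capture_output=True, text=True)` and pass the captured output on for parsing.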

For the File Management tab, see Specifying File Management Properties for details on the different file transfer scenarios.