Software Overview

Ansys Sound: Active Sound Design for Electric Vehicles, also referred to as Ansys Sound: ASDforEV or ASDforEV, is a software solution for sound design in electric vehicles or, more generally, in quiet vehicles such as recent HEV, PHEV or ICE vehicles.

As an acoustic demonstrator in real driving conditions, ASDforEV helps manufacturers and sound designers create several types of sounds, such as AVAS (Acoustic Vehicle Alerting System) sounds, which are now mandatory for silent vehicles to warn pedestrians.

Usable at any stage of vehicle design, ASDforEV enables you to create a specific audio signature for your brand.

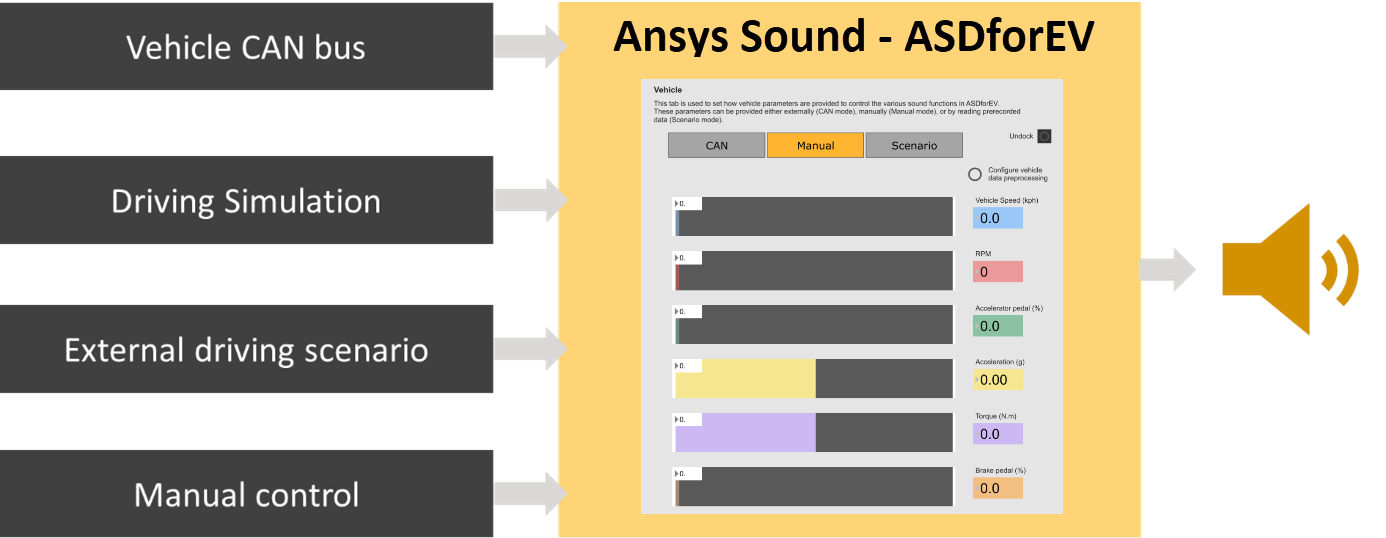

ASDforEV can be used in several modes:

- in a vehicle, using the vehicle’s CAN-bus (or other vehicle bus systems such as FlexRay)

- in a driving simulator

- with prerecorded driving scenario data

- with manual controls in the software’s graphical user interface

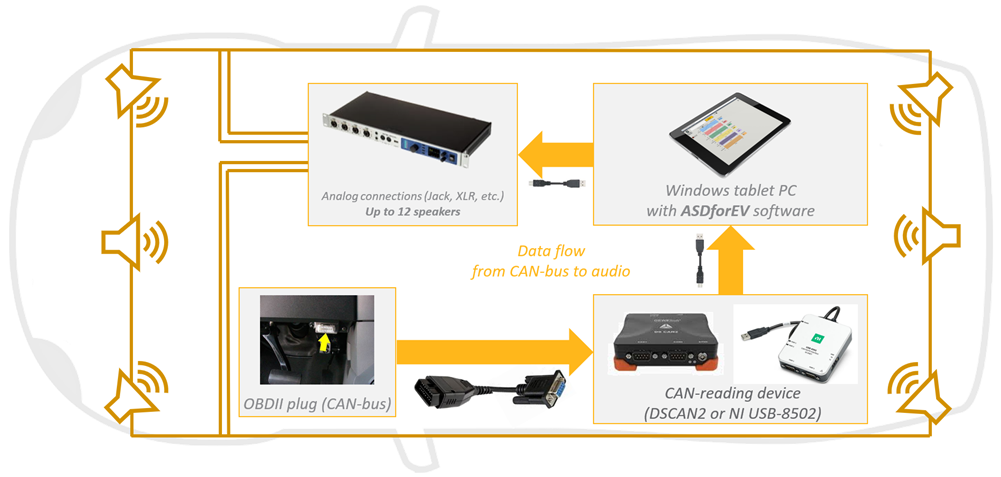

The workflow depends heavily on the usage mode. As an example, the steps below describe the workflow when the software is used in a vehicle with a dedicated CAN-bus reading device. See the dedicated sections for the other usage modes of the software.

- Connect and configure the audio output to the vehicle’s audio systems and/or speakers

- Connect and configure the reception of driving parameters from the vehicle’s CAN-bus (using either a DS-CAN2 or NI USB-8502 device)

- Load and configure your designed sounds and parameters, such as gains and laws

- Demonstrate and compare the configured design(s)

The first two steps of the workflow allow you to set up the dataflow between the vehicle parameters and the sound produced.
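
To make the idea of a dataflow from vehicle parameters to sound more concrete, here is a minimal Python sketch of how a "law" could map one driving parameter to a gain. It is an illustration only, not ASDforEV's implementation or API: the breakpoint table `GAIN_LAW`, the functions `gain_db` and `db_to_linear`, the choice of vehicle speed as the input, and the piecewise-linear interpolation are all assumptions made for this example.

```python
from bisect import bisect_right

# Hypothetical gain law: (vehicle speed in km/h, gain in dB) breakpoints.
# The values are illustrative and are not taken from ASDforEV.
GAIN_LAW = [(0.0, -60.0), (10.0, -20.0), (30.0, -6.0), (50.0, -40.0)]


def gain_db(speed_kmh, law=GAIN_LAW):
    """Return the gain in dB for a speed, by piecewise-linear interpolation.

    Speeds outside the table are clamped to the first or last breakpoint.
    """
    speeds = [s for s, _ in law]
    if speed_kmh <= speeds[0]:
        return law[0][1]
    if speed_kmh >= speeds[-1]:
        return law[-1][1]
    # Index of the first breakpoint strictly above the current speed.
    i = bisect_right(speeds, speed_kmh)
    (s0, g0), (s1, g1) = law[i - 1], law[i]
    return g0 + (g1 - g0) * (speed_kmh - s0) / (s1 - s0)


def db_to_linear(db):
    """Convert a gain in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)
```

With this hypothetical table, `gain_db(20.0)` falls halfway between the 10 km/h and 30 km/h breakpoints and returns -13.0 dB. In a real setup, the speed value would come from the configured CAN-bus input and the resulting factor would scale the sound sent to the audio output.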