Ansys Cloud Direct Support for HPC Job Management

Ansys Electronics Desktop supports the Ansys Cloud Direct service, which provides quick and easy access to cloud-based HPC resources. The service is accessible through both the Simulation ribbon and Tools > Job Management.

For this release, you can submit HFSS, HFSS 3D Layout, Icepak, Maxwell 3D, Maxwell 2D, Mechanical, Q3D Extractor, and 2D Extractor jobs, monitor their progress, and download and postprocess the results. Other solvers are either in Beta or not yet supported for Ansys Cloud Direct.

Uploads to and downloads from Ansys Cloud Direct use archives to improve speed. The Project browser shows both .aedt and .aedtz (archive) files. If you submit an unarchived project, an archive will be automatically created for upload. When the job completes, the results are packaged into an archive. When the download is initiated, the repackaged archive file is transferred to the download folder along with the other job-related files, such as the log.

For single setups, you can use multi-step submission. This separates the resource specification and reporting as appropriate for each stage of the solve.

Prerequisites for Using Ansys Cloud Direct with Ansys Electronics Desktop

- You will need an "Ansys Cloud Compute Essentials" subscription associated with your Ansys Account. The Ansys Account is an extension of the Ansys Discovery account that includes those products and services that require sign-in, such as the AppStore, Cloud Compute and others. Contact your account manager or service representative to obtain a subscription invitation.

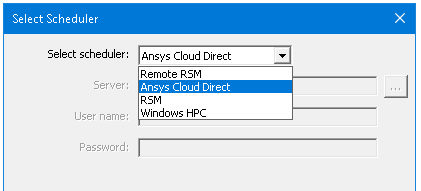

- You must select Ansys Cloud as your Job Management Scheduler, and, if necessary, log in to the Ansys Cloud Service using the Ansys Discovery Account and associated password.

Job Management UI for Ansys Cloud Direct

You can use Ansys Electronics Desktop to submit batch jobs to Ansys Cloud Direct and monitor those jobs.

This involves the following steps:

- Use Tools > Job Management > Select Scheduler to select Ansys Cloud Direct as the scheduler.

- Click Log in to launch a login window for Ansys Cloud Direct.

After a successful login, you may not need to log in again for several days if you do not log out.

If you wish to log out, click Log out from the Select Scheduler window.

- Use Tools > Job Management > Submit Job to submit a batch job to Ansys Cloud Direct.

Ansys Cloud Direct-specific Settings:

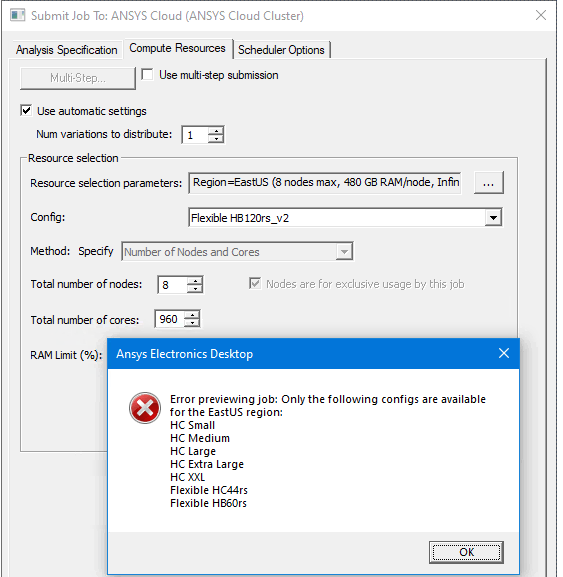

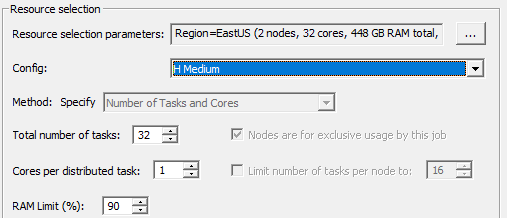

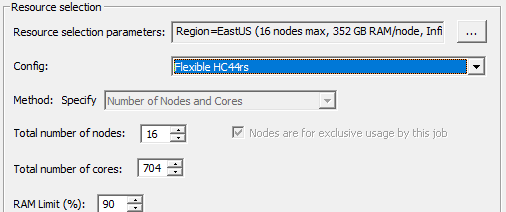

- On the Compute Resources tab, Resource Selection Parameters lets you select a Region (for example, EastUS, JapanEast, WestEurope). The Config drop-down menu does not display configurations that are unavailable in every selectable region. That is, if the regions are EastUS, WestEurope, and SouthwestUS, and none of these regions has the smallhc-based queues, then the "HC Small" configuration does not appear. If you select a resource that is not available in the specific region you select, an error message shows the available choices for that region.

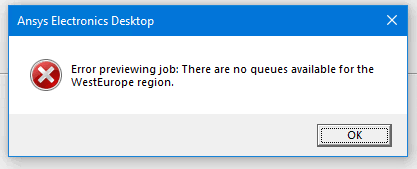

If no queues are available, an error message says so.

- On the Compute Resources tab, select a Config size and style (Flexible or fixed) based on the following:

- Small: 16 cores, 1 node, 224 GB RAM total

- Medium: 32 cores, 2 nodes, 448 GB RAM total, Infiniband

- Large: 128 cores, 8 nodes, 1792 GB RAM total, Infiniband

- Extra Large: 256 cores, 16 nodes, 3584 GB RAM total, Infiniband

- XXL: Even larger.

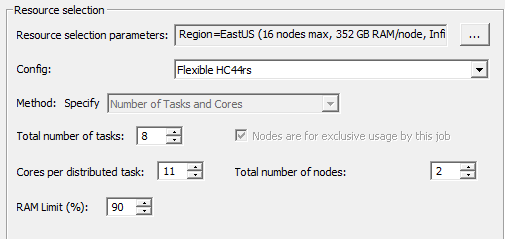

- Flexible: Flexible queues permit the following resource selection methods: Automatic Number of Nodes and Cores, and Manual Number of Tasks and Cores. If you select a Flexible queue with Manual Number of Tasks and Cores, a new Resource Selection item, "Total number of nodes", replaces the "Limit number of tasks per node to" option that appears for fixed queues.

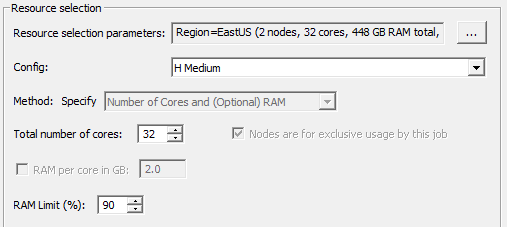

In comparison, if you select a fixed configuration, the Resource selection panel appears like this:

Important: Attempts to use more resources than the selected configuration provides will result in error messages.

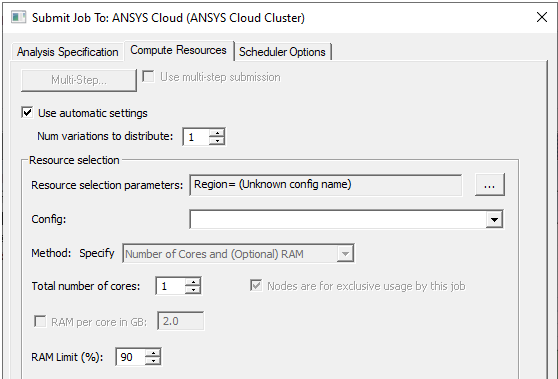
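The fixed configuration sizes listed above can be tabulated to make the per-node figures explicit. The following is a minimal Python sketch, not part of the product: the `FixedConfig` class and the `smallest_config_for` helper are hypothetical names introduced for illustration, and XXL is left out because the list gives no figures for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FixedConfig:
    """One fixed configuration size, using the totals from the list above."""
    name: str
    cores: int
    nodes: int
    ram_gb: int

    @property
    def cores_per_node(self) -> int:
        return self.cores // self.nodes

    @property
    def ram_per_node_gb(self) -> int:
        return self.ram_gb // self.nodes


# Sizes copied from the list above; XXL is omitted because no figures are given.
CONFIGS = [
    FixedConfig("Small", cores=16, nodes=1, ram_gb=224),
    FixedConfig("Medium", cores=32, nodes=2, ram_gb=448),
    FixedConfig("Large", cores=128, nodes=8, ram_gb=1792),
    FixedConfig("Extra Large", cores=256, nodes=16, ram_gb=3584),
]


def smallest_config_for(cores_needed: int) -> Optional[FixedConfig]:
    """Return the first listed configuration with enough cores, or None."""
    for cfg in CONFIGS:
        if cfg.cores >= cores_needed:
            return cfg
    return None


for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.cores_per_node} cores/node, {cfg.ram_per_node_gb} GB/node")
```

Every listed size works out to 16 cores and 224 GB of RAM per node, so the sizes differ only in the number of nodes.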

- On the Compute Resources tab, if you select Use automatic settings with Num variations to distribute set to 1, Optimetrics variations are solved sequentially. If you set Num variations to distribute to 2 or more, Optimetrics variations are solved in parallel. In both cases, other distribution types are distributed automatically.

- Given a set of minimal constraints, the Ansys Cloud Direct scheduler may assign more resources than the minimal values in order to satisfy all of the requirements. For example, if you specify 7 distributed engines with two processors per engine and also limit the number of engines per node to 4, the scheduler may increase the number of cores used in order to meet the engines-per-node limit. Click Preview Submission to see the resources assigned and to confirm that the scheduler-generated code includes an MPI specification.

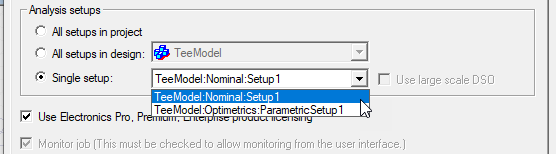

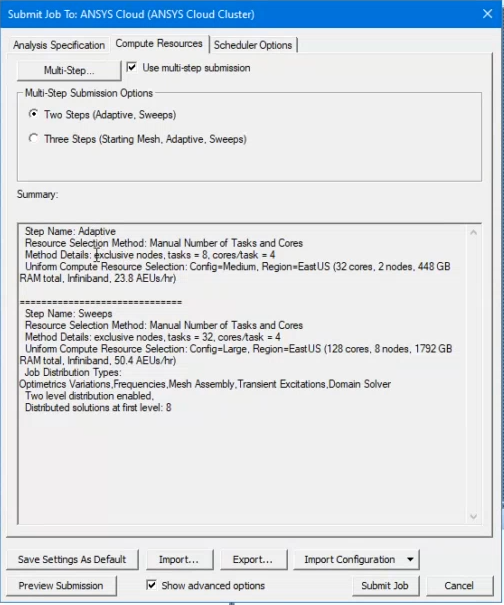
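The arithmetic behind the engines-per-node example above can be sketched as follows. This is an illustration only and not the scheduler's actual algorithm: the function name `plan_resources` is hypothetical, and it assumes whole nodes of 16 cores each are allocated (the per-node figure implied by the fixed configuration sizes). Use Preview Submission to see what the scheduler really assigns.

```python
import math
from typing import Tuple


def plan_resources(
    engines: int,
    cores_per_engine: int,
    max_engines_per_node: int,
    cores_per_node: int = 16,  # assumption: 16-core nodes, allocated whole
) -> Tuple[int, int, int]:
    """Return (nodes, cores_requested, cores_allocated) for the constraints."""
    # How many engines fit on one node, by the user limit and by core count.
    fit = min(max_engines_per_node, cores_per_node // cores_per_engine)
    if fit < 1:
        raise ValueError("one engine needs more cores than a node provides")
    nodes = math.ceil(engines / fit)
    cores_requested = engines * cores_per_engine
    cores_allocated = nodes * cores_per_node
    return nodes, cores_requested, cores_allocated


# 7 engines, 2 cores each, at most 4 engines per node.
nodes, requested, allocated = plan_resources(7, 2, 4)
print(nodes, requested, allocated)
```

Under these assumptions the request needs only 14 cores, but the limit of 4 engines per node forces a second node, so 32 cores are allocated.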

- To use Multi-Step Submission on Ansys Cloud Direct, you must specify a single setup on the Analysis Specification tab.

This enables Multi-Step options on the Compute Resources tab.

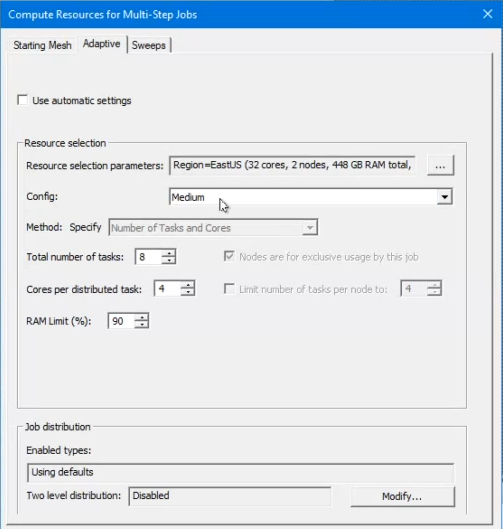

Click the Multi-Step button to open the Compute Resources for Multi-Step Jobs dialog box. It has tabs for Starting Mesh, Adaptive, and Sweeps.

On the Adaptive tab, you can select Use automatic settings or specify Resource Selection parameters.

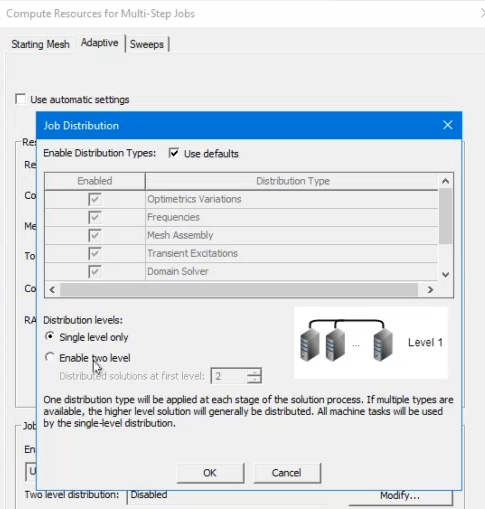

Click the Modify... button on the Adaptive tab to open the Job Distribution dialog box.

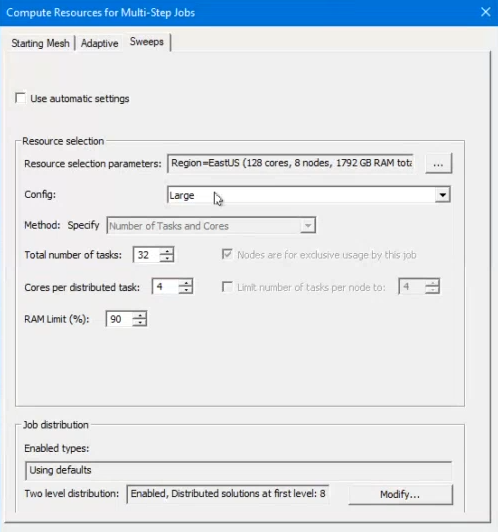

The Sweeps tab lets you specify resources or Use automatic settings.

The Sweeps tab includes an independent Modify... button to specify Job Distribution.

Select automatic settings, or specify different resources for each step. When the job finishes (that is, on the last step), reports are updated and traces are extracted into CSV files.

If you select a fixed queue with automatic settings, the Resource selection panel allows only cores to be specified, and the RAM setting is shown but disabled.

If you select a flexible queue with automatic settings, the Resource selection panel allows only nodes and cores to be specified:

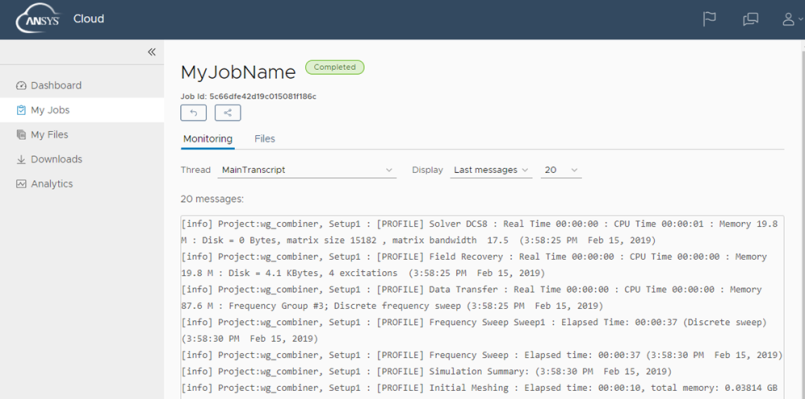

- Use Tools > Job Management > Monitor Job to monitor the job's progress.

- You will receive an email when the job has started, and another when it has finished.

- Use the link in the email (or the Portal button on the job monitoring window) to launch the Ansys Cloud Portal and view detailed results.

Environment Variable Settings for Ansys Cloud Direct

When you submit jobs to Ansys Cloud Direct from Electronics Desktop, certain environment variables and batchoptions must be set to ensure proper operation on the cloud. The job submission window applies these settings automatically.

The following environment variable settings override any values that you set for the same variables:

- ANS_NODEPCHECK=1

- ANSYSEM_FIX_REVERSE_LOOKUP=1

- ANSYSEM_DESKTOP_SUBNET_FROM_COMENGINE_ADDR=1

The environment variable ANSYSEM_ENV_VARS_TO_PASS contains a semicolon-separated list of filters for environment variables to be passed from ansysedt.exe to COM engines. You are allowed to set this environment variable. After the job is prepared, the variable contains at least the following filters, with any user-requested filters appended after them:

ANSOFT_*;ANS_*;ANSYSEM_*;ANSYSLMD_LICENSE_*;I_MPI*
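As a rough illustration of how such a filter list behaves, the Python sketch below builds the combined value and checks which variable names it would match. It assumes the filters act like shell-style wildcards, which the text above suggests but does not state. The helper names `build_filter_value` and `is_passed`, and the `MY_*` user filter, are hypothetical.

```python
import fnmatch

# Filters that are always present, in the order given above.
REQUIRED_FILTERS = ["ANSOFT_*", "ANS_*", "ANSYSEM_*", "ANSYSLMD_LICENSE_*", "I_MPI*"]


def build_filter_value(user_value: str = "") -> str:
    """Return the required filters followed by any user-requested filters."""
    user_filters = [f for f in user_value.split(";") if f]
    return ";".join(REQUIRED_FILTERS + user_filters)


def is_passed(name: str, filter_value: str) -> bool:
    """True if the variable name matches any filter (assumed glob semantics)."""
    patterns = [p for p in filter_value.split(";") if p]
    return any(fnmatch.fnmatchcase(name, p) for p in patterns)


value = build_filter_value("MY_*")  # hypothetical user filter
for name in ("ANS_NODEPCHECK", "I_MPI_FABRICS", "MY_SETTING", "HOME"):
    print(name, is_passed(name, value))
```

With this value, the first three names match a filter and `HOME` does not.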

Troubleshooting and Debugging Environment Variables

To keep downloaded files that help with troubleshooting, enable the environment variable ANSYS_EM_PRESERVE_DOWNLOAD_FILES.

Debug logs (and other files generated in the working directory) from remote nodes are automatically collected.

Option Settings for Ansys Cloud Direct

The following option is automatically added for Ansys Cloud Direct jobs:

- -autoextract

- For single-step jobs, the option is "-autoextract reports".

- For multi-step jobs, the last step includes "-autoextract reports", but the earlier steps include only "-autoextract" (no reports). Reports are extracted only on the last step.

See: Running Electronics Desktop from a Command Line.
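The rule for single-step and multi-step jobs can be summarized in a few lines of Python. This is a sketch of the rule as described above, not of how the product builds its command line; `autoextract_args` is a hypothetical helper name.

```python
from typing import List


def autoextract_args(step: int, total_steps: int) -> List[str]:
    """Return the -autoextract arguments for a 1-based step of a job."""
    if not 1 <= step <= total_steps:
        raise ValueError("step must be between 1 and total_steps")
    # Reports are extracted only on the last step (or the only step).
    if step == total_steps:
        return ["-autoextract", "reports"]
    return ["-autoextract"]


# A single-step job, then each step of a three-step job.
print(autoextract_args(1, 1))
for step in (1, 2, 3):
    print(step, autoextract_args(step, 3))
```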

Automatic Batchoption Settings

The license type batchoption ("HPCLicenseType") is forced to "Pack" because Ansys Cloud Direct is configured to work only with pack licensing. The following MPI batchoptions are automatically set to "Intel" because Ansys Cloud Direct is configured to work only with Intel MPI.

- HFSS 3D Layout Design/MPIVendor

- HFSS/MPIVendor

- Icepak/MPIVendor

- Maxwell 2D/MPIVendor

- Maxwell 3D/MPIVendor

- Q3D Extractor/MPIVendor
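The effect of these forced settings can be pictured as a dictionary merge in which the forced values always win. The Python sketch below is illustrative only: `effective_batchoptions` is a hypothetical helper, and the user values shown ("SomeOtherVendor", "Example/UserOption") are placeholders, not real settings.

```python
from typing import Dict

# Products whose MPIVendor batchoption is forced, as listed above.
MPI_PRODUCTS = [
    "HFSS 3D Layout Design",
    "HFSS",
    "Icepak",
    "Maxwell 2D",
    "Maxwell 3D",
    "Q3D Extractor",
]

FORCED: Dict[str, str] = {"HPCLicenseType": "Pack"}
FORCED.update({f"{product}/MPIVendor": "Intel" for product in MPI_PRODUCTS})


def effective_batchoptions(user: Dict[str, str]) -> Dict[str, str]:
    """Merge user batchoptions with the forced ones; forced values win."""
    merged = dict(user)
    merged.update(FORCED)
    return merged


# Placeholder user settings for illustration only.
user_options = {"HFSS/MPIVendor": "SomeOtherVendor", "Example/UserOption": "1"}
for key, value in sorted(effective_batchoptions(user_options).items()):
    print(f"{key} = {value}")
```

Settings that are not in the forced list, such as the placeholder "Example/UserOption", pass through unchanged.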