Known Issues and Limitations

Configuration and Access

Only one AWS account can be associated with Ansys Gateway powered by AWS.

If an AWS account has a non-default region enabled, Express setup will fail. To see which regions are enabled by default, go to the AWS STS Regions and endpoints table in the AWS documentation and review the 'Active by default' column. (871255)

When setting up Ansys Gateway powered by AWS using the Manual setup option, the following issues and limitations apply:

When running the install.ps1 script for the AD Connector service using a valid certificate, the installer may incorrectly report that the certificate is expired, even though it is not expired. The following error is displayed in the output: "Certificate is expired. Renew your certificate and retry the process." As a result, the AD Connector service is not installed successfully. (1376259)

If you install the AD Connector service with an expired certificate, no error or warning is displayed, either during the setup process or in the Ansys Gateway powered by AWS environment after setup has been completed. (1324704)

If Ansys Gateway powered by AWS was set up using the Manual setup option, and an administrator attempts to add a user locally on the page using an email address that is associated with a User Principal Name or Mail field in Active Directory, a "User successfully added" message is displayed, but the user is not actually added to the Users list. Adding a user locally using an email address that already exists in Active Directory is not supported. (1211724)

When a domain is associated with a tenant that was set up using the Manual setup option (Tenant1), and the same domain is associated as a secondary domain with another tenant that was set up using the Express setup option (Tenant2), any user who is not part of the Active Directory of Tenant1 will not be able to sign in to either tenant. In this scenario, when such a user attempts to sign in, an "Active Directory Membership Required" error is displayed. Even though the user is eligible to sign in to Tenant2, the tenant selector is not displayed due to the Active Directory issue on Tenant1, preventing the user from accessing either of the tenants. (934167)

When configuring custom IT policy extensions, parameter attributes are not supported for Windows PowerShell (.ps1) scripts. When defining parameters in the parameter list, include only the parameter names (and types, if needed) without any attributes. (1359154)
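As an illustration of the limitation above, the following Python sketch scans a .ps1 script for attributes before it is used as a custom IT policy extension. The helper name find_param_attributes and the detection heuristic are assumptions made for this example and are not part of Ansys Gateway powered by AWS: an attribute is taken to be a bracketed name followed by an opening parenthesis, such as [Parameter(Mandatory = $true)], whereas a type such as [string] has no parenthesis. The check scans the whole script text, so it may also flag attributes outside the parameter list.

```python
import re

# Heuristic (assumption): PowerShell attributes look like [Name(...)],
# while type constraints look like [string] or [int[]] with no parenthesis.
_ATTRIBUTE = re.compile(r"\[\s*([A-Za-z_][\w.]*)\s*\(")


def find_param_attributes(script_text: str) -> list:
    """Return the names of attributes found anywhere in the script text."""
    return _ATTRIBUTE.findall(script_text)


# Not supported: the parameter carries a [Parameter(...)] attribute.
with_attributes = """
param(
    [Parameter(Mandatory = $true)]
    [string]$ServerName
)
"""

# Supported form: parameter names and types only.
names_and_types_only = """
param(
    [string]$ServerName,
    [int]$Port
)
"""

print(find_param_attributes(with_attributes))       # ['Parameter']
print(find_param_attributes(names_and_types_only))  # []
```

An empty result suggests that the parameter list contains only names and types, which is the form described above.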

Licensing for Ansys Applications

If you enable the 'Use custom licensing settings' option when creating a virtual desktop or autoscaling cluster, but do not specify any custom licensing settings, the resource is configured to use global licensing settings even though you chose not to use global licensing settings. (1354059)

When viewing resource details, licensing information is only displayed if you are a tenant administrator. (1362018)

Convert to OS Image

If the limit on a project space budget is reached during the conversion of a virtual desktop to an OS image, no warning is displayed, and the conversion is allowed to complete. Since the virtual desktop must remain running during the conversion process, a budget overrun may occur. (1318739)

Virtual Desktops

When creation of a virtual desktop with GPUs fails due to insufficient capacity in the selected availability zone, the message in the error log incorrectly states that you can get capacity by not specifying an availability zone. When creating a virtual desktop, selection of an availability zone is required. Additionally, if the message suggests an alternate availability zone, that does not mean that capacity is guaranteed in that zone at that time. Capacity varies across zones, and there may be limited availability of GPU-enabled instance types at that time. (1305153)

When creating a virtual desktop using a custom operating system (OS) image (one that was created by converting an existing virtual desktop to an OS image), the following limitations apply:

If the custom image includes an NVIDIA GPU driver (GRID or Tesla), it is not possible to install a different GPU driver. You must choose an instance type whose GPU type is compatible with the GPU driver type being installed with the image. Or, you can choose an instance type that does not have GPUs. In this case, the virtual desktop will function but will not make use of the GPU driver as the machine has no GPUs.

To determine whether a custom image has a GPU driver installed, and which type of driver is installed, you can check the image's properties on the page.

If the custom image does not include a GPU driver, it is not possible to install one on the virtual desktop. If you select an instance type with GPUs, the GPU driver options are inadvertently displayed. If you specify that you want to install a GPU driver, it will not get installed. (1357031, 1359497)

To create a virtual desktop with a GPU driver, you must select a default OS image (such as Windows Server 2022 or Rocky Linux 8.10 by CIQ), or select a custom OS image that includes a GPU driver.

If the custom image contains a data disk that was available on the original virtual desktop used to create the image, the Configure storage page of the virtual desktop creation wizard lets you freely define another data disk and does not notify you that a data disk already exists in the selected image. If you do define another data disk (instead of selecting 'none'), the virtual desktop will have two data disks. (1305450)

When applications fail to install due to lack of disk space, their status is incorrectly shown as 'Installed' in the virtual desktop details. (1289128)

When creating a Windows virtual desktop with applications selected for installation, and a Windows update is triggered on the newly created virtual machine, application installation may fail. (975861, 1318356)

To avoid this issue, Ansys recommends that you create the virtual desktop without any applications, and then add applications after the virtual desktop has been created. See Adding Applications to a Virtual Desktop in the User's Guide.

On a Linux virtual desktop, selecting multiple applications with GNOME Desktop as a dependency may result in conflicts when the applications are installed in parallel. (1046444)

To avoid this issue, Ansys recommends that you:

- Install one application when creating the virtual desktop and add other applications one at a time after the virtual desktop has been created.

- Install GNOME Desktop last so that the RDP connection to the Linux virtual desktop is not affected by the installation of other applications.

When the instance type used for an existing virtual desktop is deprecated or retired, the view on the Hardware tab in the virtual desktop's details becomes blank, making it impossible to change the hardware. (1268704)

Tesla drivers do not support graphics visualization. If accelerated graphics are desired, use a GRID driver.

On a Linux virtual desktop, use GNOME Desktop with the GRID driver, as KDE does not support accelerated graphics. (1198261)

On Linux virtual desktops with a CentOS operating system, the 'Stop virtual desktop when no user has been active for' timer does not work. The virtual desktop remains in the Running state even if no user has been active for the duration of the timer. (1222506)

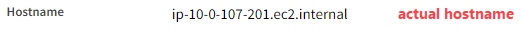

When Ansys Gateway powered by AWS has been set up using the Express setup option, the hostname displayed in a virtual machine's details may not be the actual hostname of the machine in the AWS domain. This is important if you are referencing virtual desktops in your cluster configurations or trying to connect to a virtual machine from your local machine.

A hostname in the AWS domain begins with 'ip' and has a format similar to the following:

In some scenarios, the displayed hostname starts with 'agw'. This is not the actual hostname.

Be aware of the following:

For Linux virtual desktops, the actual hostname is not displayed in the machine's settings when the virtual machine is being created (the resource is requested, starting, or waiting for services). Once the machine goes into the Running state and the Connect button is displayed, the actual hostname becomes available in the resource details and remains displayed there from that point on.

For Windows virtual desktops, the actual hostname is not displayed in the machine's settings at any time. When referencing Windows virtual desktops in your configurations, you must use the Private IP address instead of the hostname.

When connecting to a Windows virtual desktop created before November 7, 2025, you may be immediately signed out of the remote desktop session in some cases. This can occur if sign-out timers are enabled on the virtual desktop and have been previously triggered due to user inactivity. To resolve this issue, recreate the virtual desktop. (1346483)

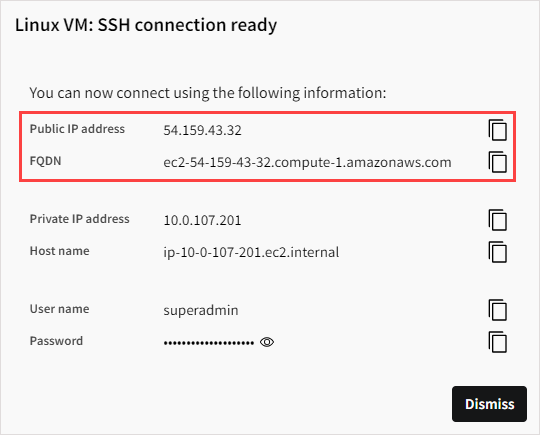

When connecting to a Linux virtual machine from another virtual machine in the same project space using SSH, using the hostname will not work. Use the Private IP address.

When connecting to a Linux virtual machine from your local machine using SSH, Ansys recommends using the Public IP address or Private IP address displayed in the connection dialog. The FQDN can also be used.

When connecting to a Linux virtual machine using an SSH private key via PowerShell or the Windows command prompt, the connection may fail with an 'invalid format' error if the version of OpenSSH being used is greater than version 9.0. (1163234)

The OpenSSH version can be determined using ssh -V.

To work around this issue, Ansys recommends using an alternate SSH client such as MobaXterm.
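To check whether a local OpenSSH client falls in the affected range, the version banner can be parsed and compared against 9.0. The sketch below is a minimal example under stated assumptions: the function names are hypothetical, the sample banners in the comments are illustrative, and the regular expression assumes the banner contains "OpenSSH", an optional platform suffix, and a major.minor version.

```python
import re
import subprocess
from typing import Optional, Tuple


def parse_openssh_version(banner: str) -> Optional[Tuple[int, int]]:
    """Return (major, minor) parsed from `ssh -V` output, or None."""
    # Matches, for example, "OpenSSH_8.9p1" or "OpenSSH_for_Windows_9.5p1".
    match = re.search(r"OpenSSH\w*?_(\d+)\.(\d+)", banner)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def may_hit_invalid_format(banner: str) -> bool:
    """True when the parsed version is greater than 9.0."""
    version = parse_openssh_version(banner)
    return version is not None and version > (9, 0)


def local_ssh_banner() -> str:
    """Run `ssh -V`; the banner is normally written to stderr."""
    result = subprocess.run(["ssh", "-V"], capture_output=True, text=True)
    return (result.stderr or result.stdout).strip()
```

For example, may_hit_invalid_format(local_ssh_banner()) returns True on a machine whose client reports a version above 9.0, in which case the alternate-client workaround applies.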

Windows virtual machines should have a minimum of 2 vCPUs and 8 GB RAM. To ensure quick startup and efficient operation, Ansys recommends a minimum of 4 vCPUs and 16 GB RAM. (870406)

When a virtual desktop is being created, applications may get stuck in the 'Installing' state if multiple applications were selected during setup. If this occurs (despite a sufficient disk size), Ansys recommends installing one application at a time. Delete the virtual machine and create a new one with only one application selected for installation. You can then add more applications to the virtual machine after it has been created. See Adding Applications to a Virtual Desktop in the User's Guide. (910015)

Connecting to a virtual machine from your local machine via SSH may not work if you are connected to a company VPN, as your company's security policies may block outbound SSH communication. In this case, disconnect from the VPN before trying to SSH to the virtual machine.

When a virtual machine is created, initial configuration can sometimes trigger system reboots, and the machine may remain in the "Installing" state for a while before a user can connect to it. (870406)

Occasionally, Linux virtual desktops may lag with no apparent cause. If disconnecting from and reconnecting to the virtual desktop does not solve the issue, try restarting the virtual desktop as follows:

Disconnect from the virtual desktop session.

In Ansys Gateway powered by AWS, click Stop in the virtual desktop tile.

When the virtual desktop is in the Stopped state, wait a few minutes to allow all background processes to stop.

Click Start to restart the virtual desktop.

Reconnect to the virtual desktop.

(807886)

In some cases, virtual desktop creation may fail if there are not enough available cores in the selected AWS availability zone. When selecting a hardware type in the wizard, the Available cores value that is reported is the sum of available cores across all availability zones in the AWS region. For example, the Europe-Central region has various availability zones such as Europe-Central-1a, Europe-Central-1b, and so on. Even though you select a specific availability zone when setting up a virtual desktop, the Available cores value does not report the available cores in that specific zone. The Ansys Gateway powered by AWS development team is working on a fix but there are API limitations on the AWS side. To work around this issue, try selecting a different availability zone.
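The mismatch between the reported value and per-zone capacity can be illustrated with a small worked example. The per-zone numbers below are hypothetical and chosen only to show the arithmetic; the zone names follow the example above, and the helper fits_in_zone is an assumption for illustration.

```python
# Hypothetical per-zone availability (illustrative numbers only).
available_by_zone = {
    "Europe-Central-1a": 8,
    "Europe-Central-1b": 64,
    "Europe-Central-1c": 120,
}

# The wizard reports the regional total, not the figure for the chosen zone.
reported_available_cores = sum(available_by_zone.values())  # 8 + 64 + 120 = 192


def fits_in_zone(zone: str, requested_cores: int) -> bool:
    """True if the requested cores fit within the capacity of one zone."""
    return available_by_zone[zone] >= requested_cores


requested = 32
print(reported_available_cores >= requested)         # True: the total looks sufficient
print(fits_in_zone("Europe-Central-1a", requested))  # False: creation would fail here
print(fits_in_zone("Europe-Central-1b", requested))  # True: a different zone works
```

In this scenario a 32-core request appears to fit because the reported total is 192, yet it fails in the first zone, which is why selecting a different availability zone can resolve the failure.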

Sometimes, when you create a Linux virtual machine from an OS image, the virtual machine may get stuck in the 'Installing' state. Most likely, there is an issue with the OS image. To resolve this issue, delete the virtual machine and create a new one without using the faulty OS image. To prevent future occurrences of this issue, Ansys recommends that you delete the OS image. (727238)

When a virtual desktop is in the Stopped state, the Add application action is enabled on the Applications tab even though applications cannot be added to a virtual desktop in this state. The action enables you to select an application to install, but the application is not installed. (1047876)

Autoscaling Clusters

In some cases, when Ansys HPC Platform Services (HPS) has been added to an autoscaling cluster, HPS installation may fail. The cluster remains in the Running state and cannot be stopped, potentially incurring unnecessary costs. In other cases, even when HPS is installed successfully, there may be times when the cluster cannot be stopped. Additionally, if a budget has been set for the project space, the cluster is not stopped as expected when the budget limit is reached. (1372867)

If you are unable to stop an autoscaling cluster, contact Ansys Support for assistance.

Deleting an autoscaling cluster while it is in the Running state can cause the cluster to get stuck in a Deleting Failed state. Although the head node and compute nodes are actually deleted in AWS Cloud and no longer incur costs, they continue to appear as Running in the autoscaling cluster details in Ansys Gateway powered by AWS, leading to potential confusion and discrepancies in cost reporting and billing. To avoid this issue, always stop an autoscaling cluster before deleting it. (1331874)

In some cases, if you stop an autoscaling cluster and immediately attempt to delete it, the deletion may fail, especially if the cluster uses large instance types. Although the cluster appears to be Stopped in Ansys Gateway powered by AWS, the cluster nodes may still be in the Terminating state in AWS Cloud, preventing the cluster from getting deleted. Before deleting a cluster, Ansys recommends that you wait for a period of time after stopping the cluster and ensure that all nodes are in the Terminated state. (1341726)
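The recommended wait can be sketched as a polling loop that only reports success once every node is terminated. This is a minimal sketch, not an Ansys or AWS utility: get_states is a hypothetical callable that you would implement yourself (for example, by querying AWS for the state of each cluster node), and the timeout and polling interval are arbitrary defaults. State names are compared case-insensitively against "terminated".

```python
import time
from typing import Callable, Iterable


def all_nodes_terminated(states: Iterable[str]) -> bool:
    """True only when every reported node state is 'terminated' (any case).

    An empty collection also yields True, meaning no nodes remain.
    """
    return all(state.strip().lower() == "terminated" for state in states)


def wait_until_terminated(
    get_states: Callable[[], Iterable[str]],  # hypothetical state provider
    timeout_s: float = 600.0,
    poll_s: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll get_states until all nodes are terminated or the timeout elapses."""
    waited = 0.0
    while True:
        if all_nodes_terminated(get_states()):
            return True
        if waited >= timeout_s:
            return False
        sleep(poll_s)
        waited += poll_s
```

If the function returns False, the nodes were still shutting down when the timeout elapsed, and deleting the cluster at that point may fail as described above.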

When Ansys Gateway powered by AWS is configured to allow public IP addresses to be issued for virtual machines, autoscaling cluster creation will fail if any defined queues specify instance types with multiple network interfaces. Public IP addresses can only be assigned to virtual machines with a single network interface. (1232283)

To work around this issue, you have two options:

Disable the generation of public IP addresses. See Allowing or Preventing the Generation of Public IP Addresses for Virtual Machines in the Administration Guide.

If keeping the generation of public IP addresses enabled, choose only instance types with single network interfaces when defining queues. For a list of instance types to avoid, see the Network cards topic in the AWS documentation.
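For the second option, a candidate list of instance types can be filtered before queues are defined. The sketch below works on plain dictionaries and rests on two assumptions: that "single network interface" corresponds to an instance type with one network card (as suggested by the pointer to the Network cards topic), and that the field names mirror the NetworkInfo section returned by the EC2 DescribeInstanceTypes API. The instance type names and values in the sample data are hypothetical.

```python
from typing import Dict, Iterable, List


def single_network_card_types(instance_types: Iterable[Dict]) -> List[str]:
    """Return the names of instance types that report exactly one network card."""
    return [
        item["InstanceType"]
        for item in instance_types
        if item["NetworkInfo"]["MaximumNetworkCards"] == 1
    ]


# Hypothetical sample records (names and values are illustrative only).
candidates = [
    {"InstanceType": "example.large", "NetworkInfo": {"MaximumNetworkCards": 1}},
    {"InstanceType": "example.48xlarge", "NetworkInfo": {"MaximumNetworkCards": 4}},
]

print(single_network_card_types(candidates))  # ['example.large']
```

Confirm the actual values against the AWS documentation before relying on such a filter.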

When defining a queue for an autoscaling cluster, entering the name of a previously deleted queue is not permitted. (1300616)

If the default hardware is not available for an autoscaling cluster, the VM Size field under the Ansys HPC Platform Services (HPS) option Create a new VM with Ansys HPC Platform Services is empty. The user cannot submit the autoscaling cluster form and the following error message is displayed:

Some fields within this form are incomplete. Please address them before submitting.

To work around this issue:

- Go to the HPS selection card.

- Click Create HPS on Separate VM.

- Resolve the error. In this case, select a hardware type.

- Click Install HPS on Head Node. Make sure all the inputs are defined.

- Click Create Autoscaling Cluster.

(1384509)

Hardware

When selecting hardware for a resource, instance types that are not supported in the specified Availability Zone can be selected, resulting in resource creation failure. (1195704)

p3, p4, and p5 instances are not supported for Linux virtual desktops or clusters. (949142, 971642)

Metal instance sizes (for example, c6i.metal) are not supported or recommended.
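The two instance type limitations above can be expressed as a simple pre-check. In this sketch the function name hardware_warnings is hypothetical, and it assumes that "p3, p4, and p5" also covers family variants whose names start with those prefixes (for example, p4d), which the text above does not state explicitly.

```python
import re
from typing import List


def hardware_warnings(instance_type: str, linux: bool = True) -> List[str]:
    """Return the limitations from this section that apply to an instance type."""
    family, _, size = instance_type.lower().partition(".")
    warnings = []
    # Assumption: variants such as p3dn or p4d count as p3/p4/p5 families.
    if linux and re.match(r"p[345]", family):
        warnings.append(
            "p3, p4, and p5 instances are not supported for Linux "
            "virtual desktops or clusters"
        )
    # Covers sizes such as "metal" and "metal-24xl".
    if size.startswith("metal"):
        warnings.append("metal instance sizes are not supported or recommended")
    return warnings


print(hardware_warnings("c6i.4xlarge"))  # []
print(hardware_warnings("c6i.metal"))    # one warning about metal sizes
```

An empty list means that neither limitation applies; it does not guarantee that the instance type is available in the selected Availability Zone.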

Ansys Electronics Desktop

In some cases, when submitting an Ansys Electronics Desktop 2025 R2 job to an autoscaling cluster via HPC Platform Services, the project may crash if the design generates any type of message in the messages window while the design is being read. The most common cause of this crash is the presence of UDPs in the design. If possible, clean up the design to prevent the generation of messages (for example, remove UDPs). (1288563)

Running an Icepak simulation on an Ansys Electronics Desktop 2025 R2 autoscaling cluster results in an "Unable to locate or start COM engine" error. (1289670)

When an Icepak simulation that specifies the use of more than one compute node is submitted to an Ansys Electronics Desktop 2025 R1 autoscaling cluster, Icepak fails to run. (1214623)

In virtual desktop sessions, Ansys Electronics Desktop can only be run on Windows-based virtual desktops.

When creating and connecting to an Ansys Electronics Desktop cluster, the 'Setting up Cluster' and command prompt windows remain displayed even after the Submit Job dialog appears. Do not close these windows as this will also close the Submit Job dialog. Instead, minimize the windows or move them to another area of the screen.

In cluster workflows, Ansys Electronics Desktop is not intended to be run graphically on a Linux machine in Ansys Gateway powered by AWS. To run AEDT graphically in Ansys Gateway powered by AWS, use a Windows virtual desktop.

If Ansys Electronics Desktop is going to be installed along with other applications intended to be used graphically on the same VM (through RDP or VNC), install the packages in the following order, with the XRDP and GNOME packages included:

- Ansys Electronics Desktop

- XRDP for GNOME

- GNOME Desktop (latest version)

- Other application(s) that use RDP or VNC

If a Linux interface is required for a workflow that involves both Ansys Electronics Desktop (AEDT) and optiSLang, use the optiSLang interface instead of running AEDT graphically.

Ansys Fluids

General

As the multiport Open MPI does not support libfabric Elastic Fabric Adapter (EFA), using Open MPI to run Fluent or CFX with EFA on a Fluids autoscaling cluster does not work. Such jobs will run, but not at EFA speeds.

For any type of work requiring accelerated graphics, use an instance with GPUs and a GRID driver for best results. Using a CPU-only instance or a Tesla driver may result in graphical issues. (1249256)

In some cases, CFX, TurboGrid, or CFD-Post applications may display a blank or empty viewer. To resolve this, try setting the environment variable QT_OPENGL=desktop on the virtual machine. Similarly, if the variable is already assigned to another value, consider changing it to the aforementioned value. For more details, see The Ansys Workbench Interface in the TurboGrid documentation.

Note: The recommended fix is contrary to the fix recommended for the following issue in Known Limitations in Fluent Icing 2025 R2 and should be kept in mind: Fluent Icing may hang or crash on startup on cloud or virtual machines if the hardware graphics rendering is unsupported. A workaround is to set the QT_OPENGL=software environment variable before launching to force software rendering.
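Because the two recommendations use different values for the same variable, it can help to set QT_OPENGL per application launch rather than globally. The following sketch builds an environment for a child process; the helper name env_with_qt_opengl is hypothetical, and the launch command in the trailing comment is a placeholder, not an actual Ansys executable path.

```python
import os
from typing import Dict, Mapping, Optional

# The two values mentioned in this section.
_ALLOWED_MODES = ("desktop", "software")


def env_with_qt_opengl(
    mode: str, base: Optional[Mapping[str, str]] = None
) -> Dict[str, str]:
    """Return a copy of the environment with QT_OPENGL set to the given mode."""
    if mode not in _ALLOWED_MODES:
        raise ValueError(f"unexpected QT_OPENGL value: {mode!r}")
    env = dict(os.environ if base is None else base)
    env["QT_OPENGL"] = mode  # overrides any previously assigned value
    return env


# Example (placeholder command): launch one application with its own setting.
# subprocess.Popen(["<application launcher>"], env=env_with_qt_opengl("desktop"))
```

With this approach, CFX, TurboGrid, or CFD-Post can be started with the desktop value while Fluent Icing is started with the software value on the same machine.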

- Ansys Gateway powered by AWS does not support Slurm accounting. As a result:

- When submitting a Fluent job to a Slurm cluster using the Fluent Launcher, a "WARNING: SLURM account not provided, using default" message is displayed in the console. This warning can be ignored.

- Upon completion of a CFX solution, there is a delay before the Solution Complete dialog appears. This is due to CFX running a Slurm accounting check (that eventually times out).

Ansys Fluent

Submitting Fluent jobs to a Fluids autoscaling cluster via HPC Platform Services is supported for Fluent version 2025 R2 only.

This workflow has shown instability in some cases. Use at your discretion.

Using Fluent for graphics visualization on a Linux virtual desktop with a Tesla driver and GNOME Desktop may result in display issues. (1198261)

Tesla drivers should not be used for graphics visualization. If accelerated graphics are desired, use a GRID driver.

On a Linux virtual desktop, use GNOME Desktop with the GRID driver, as KDE does not support accelerated graphics.

Sometimes, when using Ansys Fluent to interact with a Fluids Slurm cluster, the Fluent interface may get stuck. To resolve this issue, try restarting the Slurm controller and cluster. (851798)

Some simulation reports may not be generated properly in Ansys Fluent unless the virtual machine has a GPU and a GRID driver. (634828/974826)

Running Ansys Fluent with the X11 graphics driver on a Linux virtual desktop with KDE Desktop Environment is not supported. The graphics window cannot be properly rendered. (725917)

Ansys CFX

For CFX, Open MPI does not work. Use Intel MPI. (1139796)

If you encounter issues using Intel MPI, see Ansys Fluent and CFX: Workarounds for Intel MPI-Related Issues in the Recommended Usage Guide.

For successful CFX interactive job submissions in Solver Manager to a Slurm cluster, the head node may need to be manually reconfigured in the following situations:

- The cluster queue information has changed due to the addition or removal of partitions

- The application installation (on a previously created storage) is reused for a new cluster

- The head node hostname/IP address has changed after the cluster has been shut down for an extended period

To reconfigure the head node:

As root from the head node, delete the hostinfo file (/mnt/<SHARED_STORAGE>/ansys_inc/v<###>/CFX/config/hostinfo.ccl).

As root from the head node, run:

/mnt/<SHARED_STORAGE>/ansys_inc/v<###>/CFX/bin/cfx5parhosts -add-slurm <HEAD_NODE_HOSTNAME>
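The two reconfiguration steps can be scripted once the placeholders are known. The sketch below only builds the paths and commands; it does not run them. The function names are hypothetical, the use of rm -f to delete the hostinfo file is an assumption, and the sample values passed in the example ("shared", "252", "head01") are illustrative stand-ins for <SHARED_STORAGE>, <###>, and <HEAD_NODE_HOSTNAME>.

```python
from pathlib import PurePosixPath
from typing import List, Tuple


def cfx_paths(shared_storage: str, version: str) -> Tuple[PurePosixPath, PurePosixPath]:
    """Return (hostinfo file, cfx5parhosts executable) for an installation."""
    root = PurePosixPath("/mnt") / shared_storage / "ansys_inc" / f"v{version}" / "CFX"
    return root / "config" / "hostinfo.ccl", root / "bin" / "cfx5parhosts"


def reconfigure_commands(
    shared_storage: str, version: str, head_node: str
) -> List[List[str]]:
    """Build the commands to run as root on the head node, in order."""
    hostinfo, parhosts = cfx_paths(shared_storage, version)
    return [
        ["rm", "-f", str(hostinfo)],               # step 1: delete the hostinfo file
        [str(parhosts), "-add-slurm", head_node],  # step 2: re-add the Slurm head node
    ]


# Illustrative values only; substitute those of your own cluster.
for command in reconfigure_commands("shared", "252", "head01"):
    print(" ".join(command))
```

Each printed line can be reviewed and then run as root from the head node.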

Ansys EnSight

When using KDE desktop environment, changing the Number of Servers value in the EnSight launcher window causes the following error to be displayed:

Could not create collator: 4

This is due to Qt 5.15 issues on KDE, but the error can safely be ignored.

When running EnSight on a Linux virtual desktop, transferring files from an external shared drive on an Isilon storage system may be problematic depending on the data format. To avoid or work around this issue, transfer files directly to the virtual desktop and/or an AWS file storage server with shared NFS storage.

Ansys Dynamic Reporting

When using Ansys Fluids 2025 R2 on a virtual desktop with a non-NVIDIA GPU driver, the Ansys Dynamic Reporting (ADR) template editor fails to load 3D interactive geometry. (1300685)

Workarounds:

On a Windows virtual desktop, use Edge or Firefox.

On a Linux virtual desktop, use Firefox and perform the following steps:

In Firefox, go to about:config and accept the warning.

Find webgl.force-enabled and set it to true.

When connected to a Windows virtual desktop with Ansys Fluids 2025 R1 installed, the Ansys Dynamic Reporting (ADR) template editor fails to start. This issue does not occur when using 2025 R2. (1185795)

Ansys FreeFlow

When using an instance type with GPUs, only GRID drivers are recommended for accelerated graphics. For GPU compute, both GRID and Tesla drivers are suitable.

Ansys Granta

When creating a virtual desktop with Granta MI Pro, the Granta MI Pro application will not install if there are any pending system restarts. You must create a virtual desktop without adding applications, restart the virtual desktop, and then add the Granta MI Pro application.

Ansys HPC Platform Services

For autoscaling clusters created between November 7 and November 24, 2025, which use global licensing settings or custom overrides to global settings, jobs submitted to Ansys HPC Platform Services may fail with a 'licensing pool' error. (1297377)

For example:

Ansys Electronics Desktop: Request name elec_solve_hfss does not exist in the licensing pool

Ansys Mechanical: ANSYS LICENSE MANAGER ERROR: Request name ansys does not exist in the licensing pool

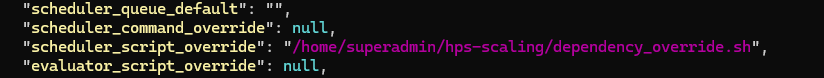

To work around this issue for an existing cluster:

Connect to the node where HPC Platform Services is installed.

Edit the ~/.ansys/rep/scaling/scaling_config.json file.

Override the "scheduler_script_override" value as shown below.

Note: This issue is fixed in Revision 98 of Ansys HPC Platform Services (the revision used for autoscaling cluster creation as of November 24, 2025).

Submitting Ansys Fluent jobs to a Fluids autoscaling cluster via HPC Platform Services is supported for Fluent version 2025 R2 only.

This workflow has shown instability in some cases. Use at your discretion.

Ansys HPC Ultimate licensing is currently not available via the HPC Manager web app.

Live monitoring of output files during a job run may not work in the Ansys HPC Manager web app.

To resolve this issue, try connecting to the cluster node where Ansys HPC Platform Services is installed and restarting the HPS service:

sudo systemctl restart hps.service

Unmounting a storage from a project space unmounts the storage from virtual machines where Ansys HPC Platform Services is installed. As a result, any jobs submitted to Ansys HPC Platform Services will fail to get evaluated unless the storage is remounted. (1245890)

If an autoscaling cluster has multiple queues, and it does not have a default queue specified, a submitted job may remain stuck in the Pending state. To resolve this issue, follow the instructions in Specifying a Default Queue for an Autoscaling Cluster (HPS Workflows) in the Recommended Usage Guide.

Occasionally, some result files may be missing when you try to download results from HPS. This issue could manifest itself in different ways, such as input files missing in the working directory during a job run. (1040261)

For a workaround, see the troubleshooting topic, When results are downloaded from Ansys HPC Platform Services, some files are missing.

See also: Troubleshooting Autoscaling Cluster Workflows that use Ansys HPC Platform Services (HPS)

Ansys Mechanical/Workbench

When submitting jobs from Mechanical on a newly created Windows virtual desktop to a Mechanical autoscaling cluster with Ansys HPC Platform Services (HPS), upload and download speeds between the virtual desktop and HPS may be noticeably poor. To resolve or prevent this issue, reboot the virtual machine after it has been created. (1295398)

Occasionally, design point updates submitted from Ansys Workbench 2025 R1 to Ansys HPC Platform Services (HPS) may fail with the following error: "HPS DotNet Client HPS DotNet Client Authorization fail: Invalid refresh token". (1237408)

When a Workbench project requiring a geometry update in Discovery is submitted to a Mechanical autoscaling cluster, the job may fail. (1219948)

Likely cause: The Ansys Product Improvement Program may be preventing Discovery from running.

Workaround: Open Discovery on any machine and close the Ansys Product Improvement Program dialog. This only needs to be done one time.

Multi-node linked analyses submitted to an autoscaling cluster do not complete successfully when the cluster is configured to use local scratch (enabled by default for Mechanical APDL clusters) and compute nodes have a local scratch drive. (1214529)

To avoid or resolve this issue you can use any of the following workarounds:

Select an instance that does not have a local scratch drive (a network drive will be used instead)

Set Local Scratch to false for the Ansys Mechanical APDL application in the cluster properties (using Ansys HPC Manager)

Solve on one node only

Solve each analysis separately (solve the upstream analysis first, then after it finishes, solve the downstream analyses, one at a time)

If an error occurs after a job is submitted from Workbench to an Ansys Mechanical autoscaling cluster via Ansys HPC Platform Services (for example, there is an issue with the solution or no license is available), an error message is displayed in Workbench, preventing the job process from continuing. The job will remain stuck in this state until the maximum job runtime is reached.

To prevent error messages from blocking the job process, you can add the following environment variable to the Execution settings in the job definition in Ansys HPC Manager:

Ansys ModelCenter

ModelCenter Remote Execution is not supported on Linux. Support will be added in a future release. (812043)

Ansys Rocky

When using an instance type with GPUs, only GRID drivers are recommended for accelerated graphics. For GPU compute, both GRID and Tesla drivers are suitable.

Ansys Speos

Submitting a job from a Slurm Controller machine to a Speos HPC cluster with multiple GPU instances will fail if Intel MPI 2021.6 is being used. In this scenario you must use Intel MPI 2018.3.222. (866096)

Tags

The following tag names are reserved for system use: BackendIdentifier, Name, ProjectSpaceId, Source, TenantId, UserId, VMid. Creating a tag with any of these names results in a 'Duplicate key tag specified' error and causes resources to be in a 'Failed State' upon creation.
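A tag list can be checked against the reserved names before a resource is created. This is a minimal sketch: the helper name reserved_tags_used is hypothetical, and the comparison is an exact, case-sensitive match, which is an assumption because the text above does not say whether differently cased names also collide.

```python
from typing import Iterable, List

# Reserved tag names listed in this section.
RESERVED_TAG_NAMES = frozenset(
    {
        "BackendIdentifier",
        "Name",
        "ProjectSpaceId",
        "Source",
        "TenantId",
        "UserId",
        "VMid",
    }
)


def reserved_tags_used(tag_names: Iterable[str]) -> List[str]:
    """Return the requested tag names that collide with reserved names."""
    # Assumption: exact, case-sensitive comparison.
    return sorted(name for name in tag_names if name in RESERVED_TAG_NAMES)


print(reserved_tags_used(["Owner", "Name", "VMid"]))  # ['Name', 'VMid']
print(reserved_tags_used(["Owner", "CostCenter"]))    # []
```

A non-empty result indicates tag names that should be renamed before the resource is created.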

General Usability

Internet Explorer is not supported.

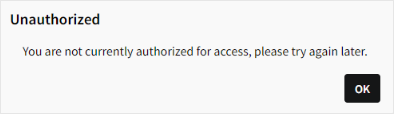

Occasionally, the following error may appear while you are working in Ansys Gateway powered by AWS:

Click OK to reload the page. In some cases, you may be prompted to sign in again. (912801)

If you perform an action in the AWS portal that changes the status of a resource in AWS, the status is not automatically updated in Ansys Gateway powered by AWS.

Stopping a resource via the AWS portal is a particular concern, as the resource will remain Running in Ansys Gateway powered by AWS and continue to incur charges on your Ansys bill.

To avoid issues, Ansys recommends that you always interact with resources via Ansys Gateway powered by AWS, not the AWS portal. (1268368)