This section addresses issues that may occur in the RSM application.

Generating the RSM Service Startup Script for Linux

The script for manually starting the RSM launcher service is usually generated during installation. If the script was not generated during installation, or if you have removed it, you can generate it manually by running the rsmconfig script with no command-line options.

Alternatively, you can run generate_service_script with the -launcher command-line option:

tools/linux> ./generate_service_script
Usage: generate_service_script -launcher
Options:
  -launcher: Generate RSM Launcher service script.

Configuring RSM for Mapped Drives and Network Shares for Windows

If RSM is used to solve local or remote jobs on mapped network drives, you may need to modify security settings to allow code to execute from those drives because code libraries may be copied to working directories within the project.

You can modify these security settings from the command line using the CasPol utility, located under the .NET Framework installation:

C:\Windows\Microsoft.NET\Framework64\v2.0.50727

In the example below, full trust is granted to files on a shared network drive so that software can run from that share:

C:\Windows\Microsoft.NET\Framework64\v2.0.50727\CasPol.exe -q -machine -ag 1 -url "file://fileserver/sharename/*" FullTrust -name "Shared Drive Work Dir"

For more information on configuring RSM clients and cluster nodes using a network installation, refer to Network Installation and Product Configuration.

Firewall Issues

Error: 'LauncherService at machine:9242 not reached'

Ensure that the launcher service is running. For instructions, refer to Installing and Configuring the RSM Launcher Service.

If a local firewall is turned on, add port 9242 to the Exceptions List for the launcher service (Ans.Rsm.Launcher.exe).

Allow ping through the firewall (Echo Request - ICMPv4-In), and enable "File and Printer Sharing" in the firewall rules.
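A quick way to tell whether the launcher port is blocked is to try opening a TCP connection to it from the client. The Python sketch below does that with the standard socket module; the function name launcher_reachable is hypothetical and not part of RSM. A successful connection only shows that something is listening on port 9242 and that the firewall lets TCP traffic through; it does not test ICMP (ping) or File and Printer Sharing.

```python
import socket


def launcher_reachable(host: str, port: int = 9242, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    False means nothing is listening on that port, a firewall is refusing
    or dropping the connection, or the host name does not resolve.
    """
    try:
        # create_connection resolves the name and tries each returned address
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and DNS failures are all OSError subclasses
        return False
```

A False result looks the same whether the service is stopped or the firewall is blocking the port, so confirm that the launcher service is running before changing firewall rules.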

User proxy ports:

A user proxy process is created for every user who submits a job to RSM. Each user proxy process uses a separate port chosen by RSM. By default, RSM randomly selects any free port. If you want to control which ports RSM can choose, ensure that a range of ports is available for this purpose, and specify the port range in the RSM application settings. See Specifying a Port Range for User Proxy Processes.

When the user proxy process is transferring files, a port is opened for each file being transferred. If you want to control which ports RSM can choose, ensure that a range of ports is available for this purpose, and specify the port range in the RSM application settings. See Specifying a Port Range for User Proxy Socket File Transfers.
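Before entering a port range in the RSM application settings, it can help to confirm which ports in that range are currently unused on the machine. This minimal sketch tries to bind each port in turn; free_ports is a hypothetical helper, not an RSM tool. A port that binds locally can still be blocked by a firewall, so this complements the firewall exceptions rather than replacing them.

```python
import socket


def free_ports(first: int, last: int, host: str = "0.0.0.0") -> list:
    """Return the ports in [first, last] that can be bound on this host right now."""
    available = []
    for port in range(first, last + 1):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            try:
                s.bind((host, port))
            except OSError:
                continue  # in use by another process, or not permitted
            available.append(port)
    return available
```

The result is a snapshot: another process can claim a port after the check, so choose a range that is reserved for RSM rather than one that merely happens to be free.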

If submitting jobs to a multi-node Ansys RSM Cluster (ARC):

When a firewall is in place, traffic from the master node to the execution nodes (and vice versa) may be blocked. To resolve this issue, you must enable ports on cluster nodes to allow incoming traffic, and then tell each node what port to use when communicating with other nodes. For details see Dealing with a Firewall in a Multi-Node Ansys RSM Cluster (ARC).

Enabling or Disabling Microsoft User Account Control (UAC)

To enable or disable UAC:

Open Control Panel > User Accounts > Change User Account Control settings.

On the User Account Control settings dialog box, use the slider to specify your UAC settings:

Always Notify: UAC is fully enabled.

Never Notify: UAC is disabled.

Note: Disabling UAC can cause security issues, so check with your IT department before changing UAC settings.

Internet Protocol version 6 (IPv6) Issues

When localhost is specified as the Submit Host in an RSM configuration, you may receive an error if the machine on which the configuration is being used has not been configured correctly as localhost.

If you are not running a Microsoft HPC cluster, test the localhost configuration by opening a command prompt and running the command ping localhost. If you get an error instead of the IP address:

Open the C:\Windows\System32\drivers\etc\hosts file.

Verify that localhost is not commented out (with a # sign in front of the entry). If localhost is commented out, remove the # sign.

Comment out any IPv6 entries that exist (for example, a line beginning with ::1).

Save and close the file.
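The hosts-file steps can be expressed as a small text transformation. This sketch (the helper name fix_hosts is hypothetical) un-comments a commented-out 127.0.0.1 localhost entry and comments out any active line whose address contains a colon, which it treats as IPv6. It only rewrites text; reading and saving C:\Windows\System32\drivers\etc\hosts is left to you and requires an elevated (administrator) session.

```python
def fix_hosts(text: str) -> str:
    """Return hosts-file text with IPv4 localhost enabled and IPv6 entries commented out."""
    fixed = []
    for line in text.splitlines():
        stripped = line.strip()
        body = stripped.lstrip("#").strip()  # the entry without leading '#' marks
        fields = body.split()
        if stripped.startswith("#") and fields[:2] == ["127.0.0.1", "localhost"]:
            fixed.append(body)  # un-comment the IPv4 localhost entry
        elif not stripped.startswith("#") and fields and ":" in fields[0]:
            fixed.append("# " + line)  # an address containing ':' is IPv6
        else:
            fixed.append(line)  # comments, blank lines and other hosts stay as-is
    return "\n".join(fixed) + "\n"
```

Keep a copy of the original file before writing the result back, and run ping localhost again afterwards.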

Note: If you are running on a Microsoft HPC cluster with Network Address Translation (NAT) enabled, Microsoft has confirmed this to be a NAT issue and is working on a resolution.

Multiple Network Interface Cards (NIC) Issues

When multiple network interface cards (NICs) are used on a remote cluster submit host, additional configuration may be necessary to establish communication between the RSM client and the submit host. For instructions, refer to Configuring a Computer with Multiple Network Interface Cards (NICs).

RSH Protocol Not Supported

The RSH protocol is not officially supported and will be completely removed from future releases.

Job Submission Failing: Network Shares Not Supported

Users may encounter errors when submitting jobs to RSM using a network share from Windows. This applies to any cluster setup, including the Ansys RSM Cluster (ARC) setup, when a network share (UNC path or mapped drive) is used as a job's working directory.

Initially, the following error may be displayed in the RSM job report:

Job was not run on the cluster. Check the cluster logs and check if the cluster is configured properly.

If you see this error, you will need to enable debug messages in the RSM job report in Workbench to get more details about the failed job. Look for an error similar to the following:

259 5/18/2017 3:10:52 PM '\\jsmithPC\John-Share\WB\InitVal_pending\UDP-2'
260 5/18/2017 3:10:52 PM CMD.EXE was started with the above path as the current directory.
261 5/18/2017 3:10:52 PM UNC paths are not supported. Defaulting to Windows directory.
262 5/18/2017 3:10:52 PM 'clusterjob.bat' is not recognized as an internal or external command,
263 5/18/2017 3:10:52 PM operable program or batch file.

Alternatively, for a Microsoft HPC cluster, you can gather diagnostic information by running the HPC Job Manager (supplied as part of the Microsoft HPC Pack), selecting the failed job, and examining the output section of the job’s tasks.

Solution: Modify the registry on Windows compute nodes to enable the execution of commands via UNC paths.

Create a text file with the following contents and save it (for example, as commandpromptUNC.reg):

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\Software\Microsoft\Command Processor]
"CompletionChar"=dword:00000009
"DefaultColor"=dword:00000000
"EnableExtensions"=dword:00000001
"DisableUNCCheck"=dword:00000001

Run the following command on all Windows compute nodes:

regedit -s commandpromptUNC.reg
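If you need to produce commandpromptUNC.reg on many machines, a script can write it. This sketch reproduces the key and values from the registry file above and writes the file as UTF-16 little-endian with a byte-order mark, the encoding regedit uses when it exports version 5.00 files. The helper names are hypothetical; the file still has to be imported with regedit -s on every Windows compute node.

```python
from pathlib import Path

# Key and DWORD values copied from the registry file shown above
KEY = r"HKEY_LOCAL_MACHINE\Software\Microsoft\Command Processor"
VALUES = {
    "CompletionChar": 0x09,
    "DefaultColor": 0x00,
    "EnableExtensions": 0x01,
    "DisableUNCCheck": 0x01,
}


def reg_text() -> str:
    """Build the .reg file contents with Windows (CRLF) line endings."""
    lines = ["Windows Registry Editor Version 5.00", "", f"[{KEY}]"]
    # Format each DWORD as 8 hex digits, as regedit does when exporting
    lines += [f'"{name}"=dword:{value:08x}' for name, value in VALUES.items()]
    return "\r\n".join(lines) + "\r\n"


def write_reg(path: str = "commandpromptUNC.reg") -> Path:
    """Write the file as UTF-16 LE with a byte-order mark and return its path."""
    out = Path(path)
    out.write_bytes(b"\xff\xfe" + reg_text().encode("utf-16-le"))
    return out
```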

For a Microsoft HPC cluster, the task of executing this on the compute nodes may be automated using the clusrun utility that is part of the Microsoft HPC Pack installation.

Error: 'Submit Failed' (Commands.xml not found)

If job submission fails and you see something similar to the following in the job log, this indicates that the commands.xml file was not transferred from the client to the cluster staging directory:

32 11/10/2017 9:07:46 AM Submit Failed
33 11/10/2017 9:07:46 AM C:\Users\atester\AppData\Local\Temp\RsmConfigTest\ibnjrsue.24u\commands.xml

This can occur if the file transfer method in the RSM configuration is set to No file transfer needed, which requires that client files be located in a shared file system that is visible to all cluster nodes.

To resolve this issue, choose one of the following options:

Change the file transfer method to RSM internal file transfer mechanism, and enter the path of the cluster staging directory.

OR

Ensure that the cluster staging directory is visible to client machines, and that client working directories are created within the shared file system.

For information on file management options, see Specifying File Management Properties.

Job Stuck on an Ansys RSM Cluster (ARC)

A job may get stuck in the Running or Submitted state if ARC services have crashed or have been restarted while the job was still running.

To resolve this issue:

First, try to cancel the job using the arckill <jobId> command. See Cancelling a Job (arckill).

If cancelling the job does not work, stop the ARC services, and then clear out the job database and load database files on the Master node and the node(s) assigned to the stuck job. Delete the backups of these databases as well.

On Windows, the database files are located in the %PROGRAMDATA%\Ansys\v242\ARC folder.

On Linux, the database files are located in the service user's home directory. For example, /home/rsmadmin/.ansys/v242/ARC.

Once the database files are deleted, restart the ARC services. The databases will be recreated automatically.
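The database locations differ by platform. This sketch (hypothetical helper names; the version folder v242 matches the paths above and must be changed for other releases) resolves the ARC folder and lists its files so you can identify the job database, the load database, and their backups. It deliberately does not delete anything, because the exact file names are not given here. On Linux, Path.home() is the home directory of whoever runs the script, so pass the service user's home (for example, /home/rsmadmin) if you run it as someone else.

```python
import os
import sys
from pathlib import Path
from typing import Optional


def arc_db_dir(version: str = "v242", service_home: Optional[str] = None) -> Path:
    """Return the folder holding the ARC job and load databases on this machine."""
    if sys.platform.startswith("win"):
        # Windows: %PROGRAMDATA%\Ansys\<version>\ARC
        program_data = os.environ.get("PROGRAMDATA", r"C:\ProgramData")
        return Path(program_data) / "Ansys" / version / "ARC"
    # Linux: <service user's home>/.ansys/<version>/ARC
    home = Path(service_home) if service_home else Path.home()
    return home / ".ansys" / version / "ARC"


def list_arc_files(folder: Path) -> list:
    """List file names in the ARC folder for review; nothing is deleted."""
    if not folder.is_dir():
        return []
    return sorted(p.name for p in folder.iterdir() if p.is_file())
```

Stop the ARC services on the Master node and the affected nodes before removing any of the listed files.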

Tip: Clearing out the databases will fix almost any issue that you encounter with an Ansys RSM Cluster. It is the equivalent of a reinstall.

Error Starting Job on Windows-Based Multi-Node Ansys RSM Cluster (ARC)

When starting a job on a multi-node Ansys RSM Cluster (ARC) that is running on Windows, you may see the following error in the RSM job report:

Job was not run on the cluster. Check the cluster logs and check if the cluster is configured properly.

Use the arcstatus command to view any errors related to the job (or check the ArcNode log). You may see an error similar to the following:

2017-12-02 12:04:29 [WARN] System.ComponentModel.Win32Exception: The directory name is invalid ["\\MachineName\RSM_temp\tkdqfuro.4ef\clusterjob.bat"] (CreateProcessAsUser)

This is likely due to a permissions restriction on the share that is displayed.

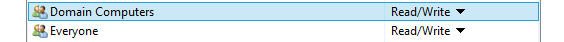

To resolve this issue, you may need to open the network share of the cluster staging directory (\\MachineName\RSM_temp in the example) and grant Read/Write permissions on one of the following accounts:

Error Starting RSM Job: Mutex /tmp/UserProxyLauncherLock.lock Issues

When submitting a job to RSM, you may get an error similar to the following:

2017-10-05 20:10:13 [WARN] Mutex /tmp/UserProxyLauncherLock.lock is taking a long time to obtain, but the process that is using the mutex is still running ansarclnx1:::94255:::Ans.Rsm.Launcher. PID 94255

To resolve this issue:

Stop the RSM launcher service.

Remove /tmp/UserProxyLauncherLock.lock.

Restart RSM.
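Step 2 can be scripted as follows. The helper is hypothetical and only removes the lock file; stopping and restarting the launcher service are done with your usual service-management procedure, and the removal should happen only while the service is stopped. If the file belongs to another user, run the script as that user or as root.

```python
from pathlib import Path

# Lock file named in the error message above
LOCK_FILE = Path("/tmp/UserProxyLauncherLock.lock")


def remove_launcher_lock(lock: Path = LOCK_FILE) -> bool:
    """Delete the stale launcher lock; return True if a file was removed.

    Call this only while the RSM launcher service is stopped.
    """
    try:
        lock.unlink()
        return True
    except FileNotFoundError:
        return False  # nothing to remove
```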

RSM Error: User Proxy Timeout

If an administrator has configured a feature like "hidepid" on Linux, so that users cannot see each other's processes, the user proxy could time out.

If you run into this issue, consult your system administrator.

At a minimum, an administrator should check to make sure that the RSM service user (for example, rsmadmin) can see user proxy processes spawned for other users.
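One way for an administrator to see whether hidepid is in effect is to inspect the mount options of /proc, which Linux lists in /proc/mounts. This sketch (hypothetical helper name) returns the hidepid value, or None when the option is absent. The proc filesystem also accepts a gid= mount option naming a group whose members are exempt from hidepid, which is worth checking alongside it.

```python
from typing import Optional


def proc_hidepid(mounts_text: str) -> Optional[str]:
    """Return the hidepid value of the /proc mount, or None if it is not set."""
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts fields: device, mount point, fs type, options, dump, pass
        if len(fields) >= 4 and fields[1] == "/proc" and fields[2] == "proc":
            for opt in fields[3].split(","):
                if opt.startswith("hidepid="):
                    return opt.split("=", 1)[1]
    return None
```

On the machine itself, pass it the contents of /proc/mounts, for example open("/proc/mounts").read(). Any value other than 0 (or off) hides other users' processes from users outside the gid= group.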

Error: Exception downloading file: No connection could be made because the target machine actively refused it

In some cases, when result files are being downloaded from a cluster, some files do not get downloaded.

If this occurs, we recommend increasing the value of the User Proxy timeout setting to more than 10 minutes.

You will need to edit the following setting in the Ans.Rsm.AppSettings.config file:

<add key="IdleTimeoutMinutes" value="10" />

This setting appears twice in the configuration file. We recommend changing it in both places.
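Because the key appears twice, a script that updates every occurrence avoids missing one. This sketch (hypothetical helper names) assumes the file is UTF-8 and that the entries are written exactly as shown above, with the key attribute before value and double quotes. It uses a regular expression so that the rest of the file, including comments and line endings, is left untouched, and it returns the number of replacements so you can confirm that both entries changed.

```python
import re
from typing import Tuple

# Matches <add key="IdleTimeoutMinutes" value="NN" as written in the file
PATTERN = re.compile(r'(<add\s+key="IdleTimeoutMinutes"\s+value=")(\d+)(")')


def set_idle_timeout(text: str, minutes: int) -> Tuple[str, int]:
    """Return (new_text, count) with every IdleTimeoutMinutes value replaced."""
    return PATTERN.subn(lambda m: f"{m.group(1)}{minutes}{m.group(3)}", text)


def update_config(path: str, minutes: int) -> int:
    """Edit the config file in place and return how many entries were changed."""
    # newline="" keeps the file's existing line endings on read and write
    with open(path, encoding="utf-8", newline="") as f:
        text = f.read()
    new_text, count = set_idle_timeout(text, minutes)
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.write(new_text)
    return count
```

For example, update_config("Ans.Rsm.AppSettings.config", 30) should return 2; 30 is only an illustrative value above the 10-minute default. Back up the file before editing it.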

For more information see Editing RSM Application Settings (rsm.exe | rsmutils appsettings).

Linux Threading Limit

By default, many Linux systems limit the total number of threads each user can run at once, to avoid multiple processes overloading the system. If you are running many Ansys jobs concurrently as the same user (for example, submitting them through RSM), you may hit this limit, producing errors such as Thread creation failed.

To view the current thread limit, run the following command:

ulimit -u (bash)
limit maxproc (csh)

To increase the thread limit, run the following command:

ulimit -u [limit] (bash)
limit maxproc [limit] (csh)

Where [limit] is a numerical value (for example, 4096).

To view the administrator-imposed hard limit, run the following command:

ulimit -H -u (bash)
limit -h maxproc (csh)

You cannot increase the limit amount beyond this hard limit value. Contact your system administrator to increase the hard limit.
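The same limit can be inspected and adjusted from Python through the standard resource module (Unix only), where RLIMIT_NPROC corresponds to ulimit -u. In this sketch (hypothetical helper names), set_thread_limit changes only the soft limit and caps the request at the hard limit, so it behaves like ulimit -S -u rather than plain ulimit -u, which in bash sets both the soft and hard limits. As with ulimit, the change applies only to the current process and the processes it starts.

```python
import resource  # Unix-only standard library module


def show_thread_limit():
    """Return (soft, hard) for RLIMIT_NPROC, the limit that `ulimit -u` reports."""
    return resource.getrlimit(resource.RLIMIT_NPROC)


def set_thread_limit(requested: int) -> int:
    """Set the soft RLIMIT_NPROC limit, capped at the hard limit; return the value set."""
    _, hard = resource.getrlimit(resource.RLIMIT_NPROC)
    if hard != resource.RLIM_INFINITY and requested > hard:
        requested = hard  # raising the hard limit requires root
    resource.setrlimit(resource.RLIMIT_NPROC, (requested, hard))
    return requested
```

A wrapper that starts Ansys jobs could call set_thread_limit(4096) before launching them; the value is only an example.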

Design Point Update Subtask Error When Using Altair Grid Engine (UGE) Scheduler

When submitting design point updates to Remote Solve Manager, the design points are allocated to one or more tasks and these tasks are allocated to one or more RSM jobs. This method of job submission can create an error similar to the following when using a UGE scheduler queue:

executing task of job 3119 failed: execution daemon on host "testhost.domain.com" didn't accept task

To solve this issue, open the sge_pe file on the cluster and change the daemon_forks_slaves parameter to FALSE.
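The change is a one-line edit of the parallel environment definition. This sketch (hypothetical helper name) rewrites only the value on the daemon_forks_slaves line and preserves its spacing. It assumes the usual Grid Engine layout of one parameter name followed by its value per line, as printed by qconf -sp <pe_name>; the edited text can then be loaded back, for example with qconf -Mp <file>, or you can make the same edit interactively with qconf -mp <pe_name>. Check the qconf documentation for your Grid Engine version.

```python
import re


def disable_daemon_forks_slaves(pe_text: str) -> str:
    """Return PE configuration text with daemon_forks_slaves set to FALSE."""
    # Keep the parameter name and its padding; replace only the value.
    # [ \t]+ (not \s+) so the match never runs onto the next line.
    return re.sub(r"^(daemon_forks_slaves[ \t]+)\S+", r"\1FALSE", pe_text, flags=re.MULTILINE)
```

If the parameter is not present, the text is returned unchanged.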

HDF5 File Locking

The HDF5 version used in 2024 R2 fails when the underlying file system does not support file locking, or when locks have been disabled. To disable all file locking operations, create an environment variable named HDF5_USE_FILE_LOCKING and set its value to the five-character string FALSE.
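When a solver is started from a wrapper script, the variable can be set just for that child process. This sketch (hypothetical helper name) copies the current environment, adds HDF5_USE_FILE_LOCKING=FALSE, and runs the given command with it. To set the variable for every session instead, define it in the user's shell profile or the system environment.

```python
import os
import subprocess
import sys


def run_with_hdf5_locking_disabled(cmd: list) -> subprocess.CompletedProcess:
    """Run cmd with HDF5_USE_FILE_LOCKING=FALSE added to a copy of the environment."""
    env = dict(os.environ)
    env["HDF5_USE_FILE_LOCKING"] = "FALSE"  # the five-character string FALSE
    return subprocess.run(cmd, env=env, capture_output=True, text=True, check=False)


if __name__ == "__main__":
    # Self-check: a child Python process should print FALSE
    probe = [sys.executable, "-c", "import os; print(os.environ['HDF5_USE_FILE_LOCKING'])"]
    print(run_with_hdf5_locking_disabled(probe).stdout.strip())
```

The parent process's own environment is not modified, so only the commands launched through this helper see the setting.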

SCP File Transfers are Slow

The Ethernet connection speed has an impact on the SCP file transfer speed. When the Ethernet connection speed is slow, you can improve the file transfer speed by enabling file archiving. This reduces the number and size of files being transferred.

Archiving is also effective when IT has restrictions in place to limit the number of SCP calls that can be made at one time. This can become an issue if multiple jobs are run simultaneously, for example.

To enable archiving, set the following environment variable on the client side: RSM_SCP_ARCHIVE=1.