For more information, see the following sections:

One option of using PBS Professional with Fluent is to use the

PBS Professional launching capability integrated directly into Fluent. In this mode,

Fluent is started from the command line with the additional

-scheduler=pbs argument. Fluent then takes responsibility for

relaunching itself under PBS Professional. This has the following advantages:

The command line usage is very similar to the non-RMS (resource management system) usage.

You do not need to write a separate script.

The integrated PBS Professional capability is intended to simplify usage for the most

common situations. If you desire more control over the PBS Professional

qsub options for more complex situations or systems (or if you

are using an older version of Fluent), you can always write or adapt a PBS Professional

script that starts Fluent in the desired manner (see Using Your Own Supplied Job Script and Using Altair’s Sample Script for

details).

Information on usage is provided in the following sections:

The integrated PBS Professional capability can be activated by simply adding the

-scheduler=pbs option when launching Fluent from the command

line:

fluent <solver_version> [<Fluent_options>] -i <journal_file> -scheduler=pbs

[-scheduler_list_queues] [-scheduler_queue=<queue>] [-scheduler_opt=<opt>]

[-gui_machine=<hostname>] [-scheduler_nodeonly]

[-scheduler_tight_coupling] [-scheduler_headnode=]

[-scheduler_workdir=<working-directory>] [-scheduler_stderr=<err-file>]

[-scheduler_stdout=<out-file>]

where

fluentis the command that launches Fluent.<solver_version> specifies the dimensionality of the problem and the precision of the Fluent calculation (for example,

3d,2ddp).<Fluent_options> can be added to specify the startup option(s) for Fluent, including the options for running Fluent in parallel. For more information, see the Fluent User's Guide.

-i<journal_file> reads the specified journal file(s).-scheduler=pbsis added to the Fluent command to specify that you are running under PBS Professional.-scheduler_list_queueslists all available queues. Note that Fluent will not launch when this option is used.-scheduler_queue=<queue> sets the queue to <queue>.-scheduler_opt=<opt> enables an additional option<opt>that is relevant for PBS Professional; see the PBS Professional documentation for details. Note that you can include multiple instances of this option when you want to use more than one scheduler option.-gui_machine=<hostname> specifies that Cortex is run on a machine named <hostname> rather than automatically on the same machine as that used for compute node 0. If you just include-gui_machine(without=<hostname>), Cortex is run on the same machine used to submit thefluentcommand. This option may be necessary to avoid poor graphics performance when running Fluent under PBS Professional.-scheduler_nodeonlyallows you to specify that Cortex and host processes are launched before the job submission and that only the parallel node processes are submitted to the scheduler.-scheduler_headnode=<head-node> allows you to specify that the scheduler job submission machine is <head-node> (the default islocalhost).-scheduler_workdir=<working-directory> sets the working directory for the scheduler job, so that scheduler output is written to a directory of your choice (<working-directory>) rather than the home directory or the directory used to launch Fluent.-scheduler_stderr=<err-file> sets the name / directory of the scheduler standard error file to <err-file>; by default it is saved asfluent.<PID>.ein the working directory, where<PID>is the process ID of the top-level Fluent startup script.-scheduler_stdout=<out-file> sets the name / directory of the scheduler standard output file to <out-file>; by default it is saved asfluent.<PID>.oin the working directory, where<PID>is the process ID of the top-level Fluent startup script.-scheduler_tight_couplingenables tight integration between PBS Professional and the MPI. It is supported with Intel MPI (the default), but it will not be used if the Cortex process is launched after the job submission (which is the default when not using-scheduler_nodeonly) and is run outside of the scheduler environment by using the-gui_machineor-gui_machine=<hostname> option.

This syntax will start the Fluent job under PBS Professional using the

qsub command in a batch manner. When resources are available, PBS

Professional will start the job and return a job ID, usually in the form of

<job_ID>.<hostname>. This job ID can then be used to

query, control, or stop the job using standard PBS Professional commands, such as

qstat or qdel. The job will be run out

of the current working directory.

Important: You must have the DISPLAY environment variable properly

defined, otherwise the graphical user interface (GUI) will not operate correctly.

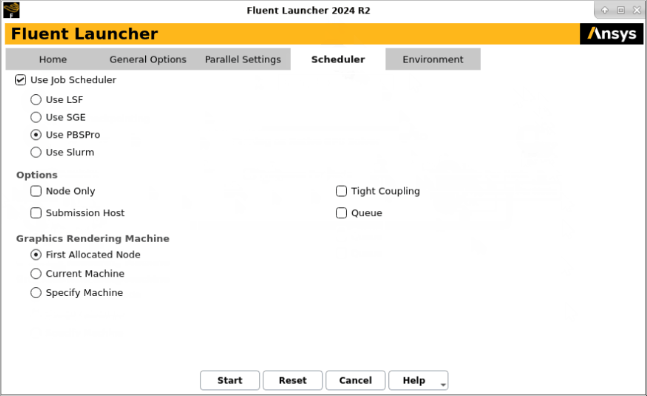

Fluent Launcher has graphical user input options that allow you to submit a Fluent job using PBS Professional. Perform the following steps:

Open Fluent Launcher (Figure 2.1: The Scheduler Tab of Fluent Launcher (Linux Version)) by entering

fluentwithout any arguments in the Linux command line.Select the Scheduler tab.

Enable the Use Job Scheduler option.

Select Use PBSPro.

You can choose to make a selection from the PBS Submission Host drop-down list to specify the PBS Pro submission host name for submitting the job, if the machine you are using to run the launcher cannot submit jobs to PBS Pro.

You can enable the following options under Options:

Enable the Node Only option to specify that Cortex and host processes are launched before the job submission and that only the parallel node processes are submitted to the scheduler.

Enable the Submission Host option and make a selection from the drop-down list to specify the PBS Pro submission host name for submitting the job, if the machine you are using to run the launcher cannot submit jobs to PBS Pro.

Enable the Tight Coupling option to enable a job-scheduler-supported native remote node access mechanism in Linux. This tight integration is supported with Intel MPI (the default).

Enable the Queue option and make a selection from the drop-down list to specify the queue name.

If you experience poor graphics performance when using PBS Professional, you may be able to improve performance by changing the machine on which Cortex (the process that manages the graphical user interface and graphics) is running. The Graphics Rendering Machine list provides the following options:

Select First Allocated Node if you want Cortex to run on the same machine as that used for compute node 0. Note that this is not available if you have enabled the Node Only option.

Select Current Machine if you want Cortex to run on the same machine used to start Fluent Launcher.

Select Specify Machine if you want Cortex to run on a specified machine, which you select from the drop-down list below.

Note that if you enable the Tight Coupling option, it will not be used if the Cortex process is launched after the job submission (which is the default when not using the Node Only option) and is run outside of the scheduler environment by using Current Machine or Specify Machine.

Set up the other aspects of your Fluent simulation using the Fluent Launcher GUI items. For more information, see the Fluent User's Guide.

Important: You must have the DISPLAY environment variable properly

defined, otherwise the graphical user interface (GUI) will not operate correctly.

Note: Submitting your Fluent job from the command line provides some options that are not available in Fluent Launcher, such as specifying the scheduler job submission machine name or setting the scheduler standard error file or standard output file. For details, see Submitting a Fluent Job from the Command Line.

Submit a parallel, 4-process job using a journal file

fl5s3.jou:

> fluent 3d -t4 -i fl5s3.jou -scheduler=pbs Relaunching fluent under PBSPro 134.les29

In the previous example, note that 134.les29 is returned from qsub. 134 is the job ID, while les29 is the hostname

on which the job was started.

Check the status of the job:

> qstat -s

les29:

Req’d Req’d Elap

Job ID Username Queue Jobname SessID NDS TSK Memory Time S Time

------------- -------- -------- --------- ------ --- --- ------ ----- - -----

134.les29 user1 workq fluent 11958 4 4 -- -- R 00:00

Job run at Thu Jan 04 at 14:48

> qstat -f 134

Job Id: 134.les29

Job_Name = fluent

Job_Owner = user1@les29

<... additional status of jobID 134>

The first command in the previous example lists all of the jobs in the queue. The second command lists the detailed status about the given job.

After the job is complete, the job will no longer show up in the output

of the qstat command. The results of the run will then be available

in the scheduler standard output file.

The integrated PBS Professional capability in Fluent 2024 R2 has the following limitations:

The PBS Professional commands (such as

qsub) must be in the users path.For parallel jobs, Fluent processes are placed on available compute resources using the

qsuboptions-l select=<N>. If you desire more sophisticated placement, you may write separate PBS Professional scripts as described in Using Your Own Supplied Job Script.RMS-specific checkpointing is not available. PBS Professional only supports checkpointing via mechanisms that are specific to the operating system on SGI systems. The integrated PBS Professional capability is based on saving the process state, and is not based on the standard application restart files (for example, Fluent case and data files) on which the LSF and SGE checkpointing is based. Thus, if you need to checkpoint, you should checkpoint your jobs by periodically saving the Fluent data file via the journal file.