Troubleshooting Slurm Autoscaling Cluster Workflows (Non-HPS)

The following topics describe common issues that may occur when interacting with a Slurm autoscaling cluster, and how to resolve them:


Unable to run sbatch or squeue commands on cluster head node

If you have connected to the cluster head node and cannot run the sbatch or squeue command successfully, try the following:

- Ensure that the head node is running.

- Wait for 5-10 minutes and try again.

If the issue persists, run the following commands:

sudo systemctl restart slurmctld

sudo systemctl restart munge
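After restarting the two services, it can help to confirm that both came back up before retrying sbatch or squeue. The following is a minimal sketch, assuming the head node manages slurmctld and munge through systemd (as the restart commands above imply). The function name check_slurm_services is hypothetical and not part of Slurm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether munge and slurmctld are active.
# Returns 0 only if both services are active.
check_slurm_services() {
  local svc status=0
  for svc in munge slurmctld; do
    if systemctl is-active --quiet "$svc"; then
      echo "$svc: active"
    else
      echo "$svc: NOT active"
      status=1
    fi
  done
  return "$status"
}
```

If both services report active, running scontrol ping is one further way to check that the controller is responding.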

Unable to run sbatch or squeue commands on Slurm client VM

If you have connected to a Linux virtual desktop and cannot run the sbatch or squeue command successfully, try the following:

- Ensure that the virtual desktop and autoscaling cluster are in the same project space and are both in the Running state.

- Follow the instructions in Restoring a Connection to a Slurm Autoscaling Cluster.

Job submitted to Slurm cluster appears to be stuck or still pending

Try the following:

- Check the status of the cluster nodes in the cluster details. See Viewing Autoscaling Cluster Details in the User's Guide.

- From the Slurm client VM or cluster head node, run the sinfo and squeue commands.

If the status is 'PD', the job is pending. The reason is listed in the squeue command output.

The most likely reason is that the requested resources are not available, either because all available nodes are busy or your request exceeds the resources available within the queue definition.

In this case, kill the job with scancel <jobID> and review your job inputs.

If the status is 'CF', the job was submitted and the requested compute nodes are being configured. If nodes need to be spun up, this can take 5-15 minutes, provided that there is quota and capacity for the specified virtual machine size in that Azure region. The system tries to provision resources for the job and waits for about 30 minutes. It then resubmits the provisioning request. This cycle can repeat indefinitely until you cancel the job.
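The PD and CF checks described above can be sketched as a small shell helper that lists your waiting jobs and prints the matching guidance for each. This is an illustrative sketch, not a supported tool: the function name triage_waiting_jobs is hypothetical, and it assumes squeue is on the PATH of the Slurm client VM or head node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print guidance for the current user's jobs that are
# pending (PD) or configuring (CF).
triage_waiting_jobs() {
  # %i = job ID, %t = compact state code, %r = reason for the current state
  squeue --noheader --user="${USER:-$(id -un)}" \
         --states=PENDING,CONFIGURING --format="%i %t %r" |
  while read -r jobid state reason; do
    case "$state" in
      PD) echo "job $jobid: pending (reason: $reason); to cancel: scancel $jobid" ;;
      CF) echo "job $jobid: nodes are being configured; allow 5-15 minutes" ;;
      *)  echo "job $jobid: state $state (reason: $reason)" ;;
    esac
  done
}
```

For more detail on a single job, scontrol show job <jobID> prints the full job record, including the requested resources.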

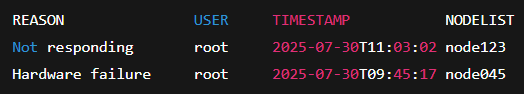

To display a list of nodes in the DOWN, DRAIN, or FAIL state, and the reason they are in that state, run sinfo -R. Output example:

Table 1. Slurm node states for problematic nodes

| State | Description | Common Reasons |
|---|---|---|
| DOWN | The node is not currently usable by the scheduler. | The node is unreachable, meaning that Slurm cannot communicate with slurmd (the Slurm daemon) on that node; the node has hardware or software failures; the node failed health checks or boot procedures. |
| DRAIN | The node has been intentionally marked as unavailable for scheduling jobs. | Scheduled maintenance; troubleshooting. |
| FAIL | The node has encountered a critical error and is not functioning properly. | Hardware failure (for example, memory, disk, CPU issues); the slurmd daemon on the node has crashed or failed to start; network connectivity problems; misconfigured node settings; the node rebooted unexpectedly or is unreachable. |
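To get a quick overview of how many nodes are in each of the problematic states, the per-node sinfo output can be counted by state. The sketch below is illustrative only: the function names list_problem_nodes and count_problem_nodes are hypothetical, and the state names that sinfo prints (for example, drained or down*) depend on the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: one line per node in a down, drain, or fail state.
# %N = node name, %T = node state, %E = reason the node is unavailable
list_problem_nodes() {
  sinfo --Node --noheader --states=down,drain,fail --format="%N %T %E"
}

# Hypothetical helper: count problem nodes per state, sorted by state name.
count_problem_nodes() {
  list_problem_nodes |
    awk '{ count[$2]++ } END { for (s in count) print s, count[s] }' |
    sort
}
```

Running count_problem_nodes prints one line per state with the number of affected nodes; list_problem_nodes shows the individual nodes and reasons.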
