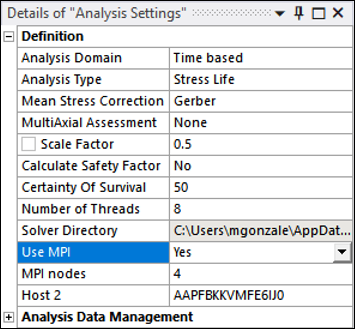

By default, MPI is not used. To use MPI capabilities, enable the Use MPI option within Analysis Settings (see below).

Then set the total number of nodes to use and Host 2, the machine on which to run the job. The job is run under MPI with the following command line:

`mpiexec -n 1 -host mpihost dtproc_exe dcl_filename -m : -host mpihost2 -n mpinodes dthost2_exe`

where:

`mpihost` is the machine you trigger the job from, which runs 1 node (the main node).

`mpihost2` is the machine the job is sent to (Host 2), which by default is your local machine.

`mpinodes` is the number of nodes to run on the second machine. This is the total number of nodes minus 1, because 1 of the nodes runs on the main machine.

`dtproc_exe` and `dthost2_exe` are the nCode executables used to run distributed jobs, located in the nCode installation directory.

`dcl_filename` is the input .dcl file to run.
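The command line above can be assembled programmatically, for example when scripting many runs. The sketch below is a minimal illustration, not part of nCode: the function name `build_mpiexec_args` and the host names in the usage line are hypothetical, and `dtproc_exe`/`dthost2_exe` are left as the same placeholders used above, so they must be replaced with the full paths of the actual executables in your nCode installation directory. It derives `mpinodes` as the total number of nodes minus 1, as described above.

```python
from typing import List


def build_mpiexec_args(
    mpihost: str,
    mpihost2: str,
    total_nodes: int,
    dcl_filename: str,
    dtproc_exe: str = "dtproc_exe",    # placeholder: full path to the real executable
    dthost2_exe: str = "dthost2_exe",  # placeholder: full path to the real executable
) -> List[str]:
    """Return the mpiexec argument list for a two-host distributed run.

    One node (the main node) runs dtproc_exe on mpihost; the remaining
    total_nodes - 1 nodes run dthost2_exe on mpihost2.
    """
    # Assumption: fewer than 2 nodes would leave nothing to distribute.
    if total_nodes < 2:
        raise ValueError("a distributed run needs at least 2 nodes")
    mpinodes = total_nodes - 1  # 1 node always runs on the main machine
    return [
        "mpiexec",
        "-n", "1", "-host", mpihost, dtproc_exe, dcl_filename, "-m",
        ":",
        "-host", mpihost2, "-n", str(mpinodes), dthost2_exe,
    ]


if __name__ == "__main__":
    # Hypothetical host names, for illustration only.
    args = build_mpiexec_args("mainpc", "workerpc", 5, "input.dcl")
    print(" ".join(args))
```

Returning a list rather than a single string lets the arguments be passed directly to `subprocess.run(args)` without shell quoting.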

Each process will use a number of threads equal to the number of cores on the computer it is running on (as is the case for a non-distributed job run).

The distributed license feature is called DTLib_Distributed and is part of a CDS package named CAEDistributed. If this license is not available, the job still runs, but not distributed: all nodes are instructed to exit, and a message recording why the job was not run distributed is written to the log at info level.

Note that:

Nodes other than the main node do not use any licenses except thread licenses.

These nodes use thread licenses for all the threads they use; they receive no "free" threads.

The main node uses the "free" threads.
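Combining these rules with the earlier statement that each process uses one thread per core of its computer gives a quick way to estimate thread-license use. The sketch below is a hypothetical helper, not an nCode tool. It assumes, as in the command line above, that all `mpinodes` secondary nodes run on Host 2. It does not model the main node's license use, because the number of "free" threads is not stated here.

```python
def secondary_thread_licenses(mpinodes: int, cores_host2: int) -> int:
    """Thread licenses used by the nodes running on Host 2.

    Each node process uses one thread per core of the machine it runs on,
    and nodes other than the main node license every thread they use.
    """
    if mpinodes < 0 or cores_host2 < 1:
        raise ValueError("mpinodes must be >= 0 and cores_host2 >= 1")
    return mpinodes * cores_host2


def total_threads(cores_main: int, mpinodes: int, cores_host2: int) -> int:
    """All threads in the run: the main node's plus those on Host 2."""
    return cores_main + secondary_thread_licenses(mpinodes, cores_host2)


if __name__ == "__main__":
    # Hypothetical setup: 5 nodes in total, so mpinodes = 4, on an 8-core Host 2.
    print(secondary_thread_licenses(4, 8))
    print(total_threads(16, 4, 8))
```

With these example numbers, the four nodes on Host 2 would need 32 thread licenses, and the run would use 48 threads in total if the main machine has 16 cores.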

The working directory should be located on a mapped network drive that is accessible on both hosts through the same drive letter (for example, Z:\workdir, where the shared folder \\server\SharedFolder is mapped to Z:\ on both hosts).
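Before starting a run, a script can at least confirm that the working directory is addressed through a drive letter such as Z:\workdir rather than a UNC path such as \\server\SharedFolder\workdir. The helper below is a hypothetical sketch using Python's `pathlib.PureWindowsPath`. It checks only the form of the path. It cannot tell whether the letter is mapped to the same share on both hosts, or whether it is a local drive such as C:.

```python
from pathlib import PureWindowsPath


def uses_drive_letter(workdir: str) -> bool:
    r"""Return True if workdir is an absolute path on a drive letter, e.g. Z:\workdir.

    UNC paths (\\server\SharedFolder\...), relative paths, and
    drive-relative paths such as Z:workdir return False.
    """
    p = PureWindowsPath(workdir)
    drive = p.drive  # "Z:" for a drive path, "\\server\share" for a UNC path
    return (
        len(drive) == 2
        and drive[1] == ":"
        and drive[0].isalpha()
        and p.root == "\\"  # require an absolute path on that drive
    )


if __name__ == "__main__":
    print(uses_drive_letter(r"Z:\workdir"))
    print(uses_drive_letter(r"\\server\SharedFolder\workdir"))
```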