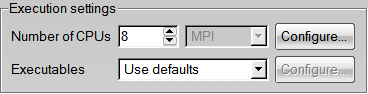

When MPI is properly configured, launching a multi-CPU run with FENSAP-ICE is straightforward: simply select the number of CPUs in the Execution settings section of the Start panel.

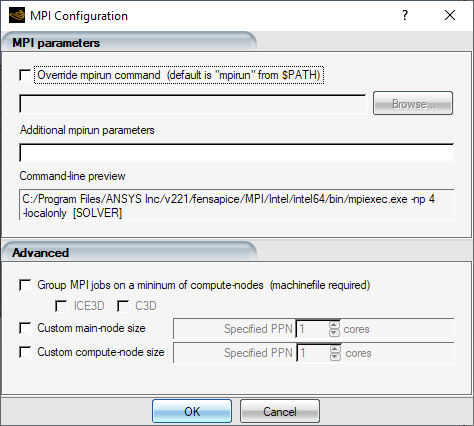

FENSAP-ICE also supports more flexible configuration of the execution environment. Clicking the Configure button in the Execution settings box, shown above, opens the following window:

The parameters that can be passed to mpirun are shown below.

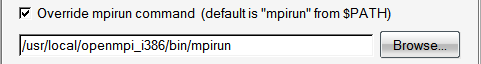

Select Override mpirun command (the default is the "mpirun" found in $PATH) to use an mpirun other than the default one from $PATH.

Additional parameters can be passed to mpirun. For example, the following option overrides the default settings and allows a custom list of machines to be used:

-machinefile /path/to/machinefile

In the PBS queuing system, the following option is often required:

-machinefile $PBS_NODEFILE
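In a PBS job, the file named by $PBS_NODEFILE usually lists one hostname per allocated CPU, so its number of lines also gives the value for -np. The Python sketch below assumes that one-line-per-CPU layout; parse_nodefile and pbs_mpirun_command are hypothetical helper names, not part of FENSAP-ICE or PBS.

```python
import os
import shlex
from pathlib import Path


def parse_nodefile(text):
    """Return the hostnames in a PBS node file, one entry per CPU slot (assumed layout)."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def pbs_mpirun_command(executable, nodefile):
    """Build an mpirun argument list that uses every CPU slot listed in the node file."""
    slots = parse_nodefile(Path(nodefile).read_text())
    return ["mpirun", "-np", str(len(slots)), "-machinefile", str(nodefile), executable]


# Only runs inside a PBS job, where the scheduler sets PBS_NODEFILE.
if __name__ == "__main__" and "PBS_NODEFILE" in os.environ:
    cmd = pbs_mpirun_command(os.path.expandvars("$NTI_PATH/test_mpi"),
                             os.environ["PBS_NODEFILE"])
    print(shlex.join(cmd))  # inspect first, then launch with subprocess.run(cmd, check=True)
```

Printing the command before running it makes it easy to compare with what the Configure window would pass to mpirun.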

Default values for the mpirun override and its parameters can be set by editing the $NTI_PATH/../config/mpi.txt file. See config/mpi.txt.

The -machinefile option selects a custom list of machines for the execution. The machinefile is a plain text file listing the names of machines on the network. The machines must:

- Share the execution directory via NFS, with an identical path on all machines.
- Share access to the same $NTI_PATH directory.
- Have the MPI library installed at the same location.
- (Linux) Be able to communicate with each other using ssh without a password.
- Be able to connect to each other using TCP (firewalls must allow the connection).

The machine running the main process must be able to access the software licenses.
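Some of these requirements can be checked before launching. The following Python sketch is not a FENSAP-ICE tool: it assumes a machinefile with one machine name per line (blank lines and # comments skipped) and uses ssh's BatchMode=yes option so that a missing password-less setup makes ssh fail instead of prompting. It only checks ssh access and that given directories exist on each machine; it does not test TCP connectivity between machines or license access. The helper names are hypothetical.

```python
import shlex
import subprocess


def unique_hosts(machinefile_text):
    """Return each distinct machine name once, in first-seen order.

    Assumes one name per line; blank lines and '#' comments are skipped.
    """
    hosts = []
    for line in machinefile_text.splitlines():
        name = line.split("#", 1)[0].strip()
        if name and name not in hosts:
            hosts.append(name)
    return hosts


def ssh_check_command(host, path):
    """ssh command that fails (rather than prompting) without password-less login,
    and succeeds only if `path` is a directory on the remote host."""
    # The remote shell re-parses the command, so the path is quoted for it.
    return ["ssh", "-o", "BatchMode=yes", host, "test", "-d", shlex.quote(path)]


def check_hosts(machinefile_text, paths, run=subprocess.run):
    """Return the (host, path) pairs whose check failed; an empty list means all passed."""
    failures = []
    for host in unique_hosts(machinefile_text):
        for path in paths:
            result = run(ssh_check_command(host, path), capture_output=True)
            if result.returncode != 0:
                failures.append((host, path))
    return failures
```

For example, check_hosts(Path("machinefile16").read_text(), [os.getcwd(), os.environ["NTI_PATH"]]) would check the execution directory and $NTI_PATH on every listed machine (this usage needs pathlib and os imported). The run parameter exists so the ssh call can be replaced in tests.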

The machinefile can be tested with the $NTI_PATH/test_mpi command, following the procedure outlined in the previous chapter.

When running mpirun from the command line, simply add -machinefile filename to the arguments. The filename can be given as either an absolute or a relative path. For example, to use the file:

/home/user/machinefile16

located in your home directory, the command line is:

mpirun -np 16 -machinefile /home/user/machinefile16 $NTI_PATH/test_mpi

A relative machinefile path can be used when running from a project subdirectory:

mpirun -np 16 -machinefile ../machinefile16 $NTI_PATH/fensapMPI
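A machinefile such as machinefile16 can also be generated instead of typed by hand. The Python sketch below is illustrative only: the host names node01 to node04 are hypothetical, and it assumes the convention of listing a machine once per CPU (the same convention as the repeated m5 entries in the example at the end of this section). Check your MPI implementation's documentation for the exact format it accepts.

```python
from pathlib import Path


def machinefile_lines(cpus_per_host):
    """Expand {host: cpu_count} into one machine name per CPU, grouped by host.

    Assumes the convention of repeating a machine name once per CPU slot.
    """
    lines = []
    for host, count in cpus_per_host.items():
        lines.extend([host] * count)
    return lines


def write_machinefile(path, cpus_per_host):
    """Write the machinefile and return its entry count, i.e. the matching -np value."""
    lines = machinefile_lines(cpus_per_host)
    Path(path).write_text("\n".join(lines) + "\n")
    return len(lines)


# Hypothetical cluster: four 4-CPU nodes, giving 16 entries for an -np 16 run.
nodes = {"node01": 4, "node02": 4, "node03": 4, "node04": 4}
```

Calling write_machinefile(Path.home() / "machinefile16", nodes) would write the file and return 16, the value to pass with -np.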

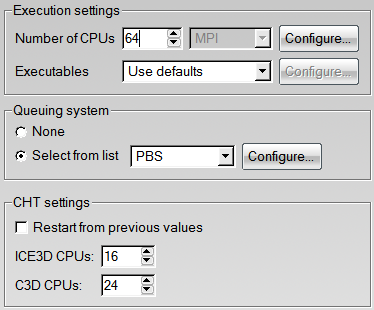

Runs that involve several solvers can assign a different number of CPUs to each solver. For example, in a CHT3D (FENSAP) run, a 64-CPU execution could be configured with 24 CPUs for C3D and 16 CPUs for ICE3D:

The default behavior of most mpirun implementations is to use the machinefile as-is, assigning CPUs in the order in which the machines are listed. However, this can scatter the execution CPUs over a large number of separate machines.

For example, if a machinefile such as m1,m2,m3,m4,m5,m5,m5,m5 is used for 4 CPUs, the execution would be scattered across m1, m2, m3 and m4, even though m5 alone is listed with 4 CPUs.
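The following Python sketch reproduces this example. in_order_allocation models the default behavior described above (the first N entries, in listed order). packed_allocation is a hypothetical alternative, shown only to illustrate how preferring the machines with the most listed slots keeps a run on fewer machines; neither function is the actual placement code of FENSAP-ICE or of any MPI implementation.

```python
from collections import Counter


def in_order_allocation(machinefile, ncpus):
    """Default behavior: take the first `ncpus` entries, in the order listed."""
    if ncpus > len(machinefile):
        raise ValueError("machinefile has fewer entries than requested CPUs")
    return machinefile[:ncpus]


def packed_allocation(machinefile, ncpus):
    """Illustrative alternative: fill the machines with the most listed slots first."""
    if ncpus > len(machinefile):
        raise ValueError("machinefile has fewer entries than requested CPUs")
    slots = Counter(machinefile)  # machine name -> number of times it is listed
    chosen = []
    # Most slots first; sorted() is stable, so ties keep the listed order.
    for host in sorted(slots, key=lambda h: -slots[h]):
        if len(chosen) == ncpus:
            break
        take = min(slots[host], ncpus - len(chosen))
        chosen.extend([host] * take)
    return chosen


machines = ["m1", "m2", "m3", "m4", "m5", "m5", "m5", "m5"]
print(in_order_allocation(machines, 4))  # ['m1', 'm2', 'm3', 'm4']
print(packed_allocation(machines, 4))    # ['m5', 'm5', 'm5', 'm5']
```

With the default in-order behavior, the 4 CPUs land on four different machines; the packed variant keeps all of them on m5.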