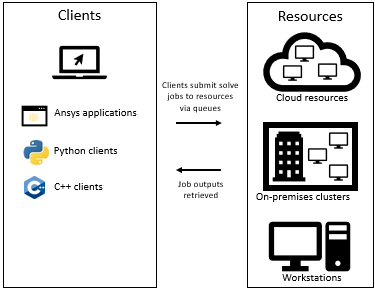

Traditional HPC workflows use an agentless, push-based architecture: users push work to a resource, which then does the work. For example, an Ansys Mechanical user might submit a solve job to a Remote Solve Manager (RSM) queue that has specific compute resources associated with it.

In this scenario, users must know their organization's infrastructure and decide which queues or machines to submit jobs to. It also implies that only one machine or resource manager (orchestrator) can be used at a time. Finally, the task to be run must be defined up front, which results in a one-to-one mapping between tasks and scheduler jobs.
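The push model described above can be illustrated with a minimal sketch. All names here (`Queue`, `submit`, the queue names) are hypothetical and chosen for illustration only; they are not part of any Ansys or scheduler API. The point is that the caller must name the target queue explicitly, and each submitted task becomes exactly one job.

```python
from dataclasses import dataclass, field


@dataclass
class Queue:
    """Hypothetical queue bound to a fixed set of compute resources."""
    name: str
    cores: int
    jobs: list = field(default_factory=list)


def submit(queues, queue_name, task):
    """Push one task to the queue the user chose; one job per task."""
    queue = queues[queue_name]  # raises KeyError if the user names an unknown queue
    job_id = f"{queue_name}-{len(queue.jobs) + 1}"
    queue.jobs.append((job_id, task))
    return job_id


queues = {
    "workstation": Queue("workstation", cores=8),
    "cluster": Queue("cluster", cores=128),
}

# The user has to know that "cluster" exists and is the right choice.
job_id = submit(queues, "cluster", "solve model")
```

Nothing in this sketch helps the user choose between `workstation` and `cluster`; that knowledge has to come from outside the system.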

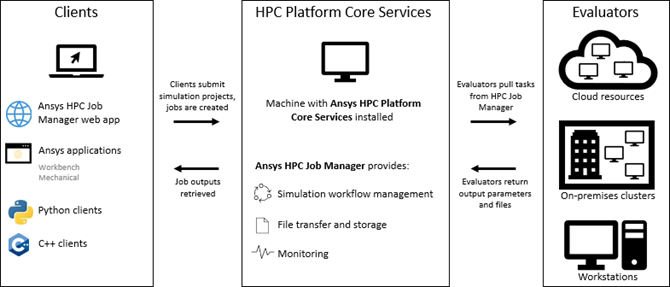

By comparison, the Ansys HPC Platform Services system has a pull architecture: users add projects to a datastore, and workers (agents) pull from the datastore the tasks that they are capable of executing. Central to this orchestration are the Ansys HPC Platform Core Services, which are delivered via the Ansys HPC Job Manager.

The key advantage of this architecture is that users do not need to know anything about the infrastructure or queues, or decide which resources to use (although they can still make specific choices if they wish). The system has no implicit link to a single machine or resource manager (orchestrator), and several agents can be connected at the same time, any of which could be a suitable candidate to pick up the work.
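The pull model can be sketched in a few lines of Python. This is a conceptual illustration only, not the Ansys HPC Platform Services API: the `Task`, `Agent`, and `Datastore` classes, their fields, and the application and agent names are all assumptions made for the example. Each agent asks the datastore for work and receives only a task whose requirements it can meet.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    """Hypothetical task with the requirements an agent must satisfy."""
    name: str
    required_app: str
    min_cores: int
    assigned_to: Optional[str] = None


@dataclass
class Agent:
    """Hypothetical worker that advertises its installed apps and cores."""
    name: str
    apps: set
    cores: int

    def can_run(self, task):
        return task.required_app in self.apps and task.min_cores <= self.cores


class Datastore:
    """Hypothetical store of pending tasks that agents pull from."""

    def __init__(self):
        self.pending = []

    def add(self, task):
        self.pending.append(task)

    def pull(self, agent):
        """Return the first pending task the agent can run, or None."""
        for task in self.pending:
            if agent.can_run(task):
                self.pending.remove(task)
                task.assigned_to = agent.name
                return task
        return None


store = Datastore()
store.add(Task("mech-solve", required_app="mechanical", min_cores=16))
store.add(Task("cfd-run", required_app="fluent", min_cores=64))

small = Agent("workstation", apps={"mechanical"}, cores=16)
big = Agent("cluster-node", apps={"mechanical", "fluent"}, cores=128)

first = store.pull(small)   # the workstation can run mech-solve
second = store.pull(small)  # nothing left that the workstation can run
third = store.pull(big)     # the cluster node picks up cfd-run
```

The user who added the two tasks never named an agent or a queue. Matching is done at pull time, so connecting a further agent requires no change on the submitting side.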