Configuring a Parallel HFSS Regions Simulation in HFSS 3D Layout

From the SIwave sweep setup, complete these steps to configure a parallel solve.

- Configure a distributed solution setup.

- Set up an SIwave regions simulation.

- From the Edit Frequency Sweep window, click HFSS (user-defined regions) and Solve regions in parallel.

Note:

If the Solve regions in parallel option is unavailable, ensure that Generate regions schematic is deselected. These options are mutually exclusive.

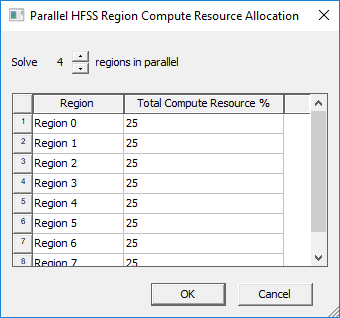

- Click Configure to open the Parallel HFSS Region Compute Resource Allocation window.

- Specify the number of regions to solve in parallel, and allocate a percentage of the total resources to each region. See the Example section that follows these steps.

The percentage values are converted to specific machine names, CPU counts and memory footprints during simulation setup.

- Click OK to return to the Edit Frequency Sweep window.

- Click OK to close the setup.

Example

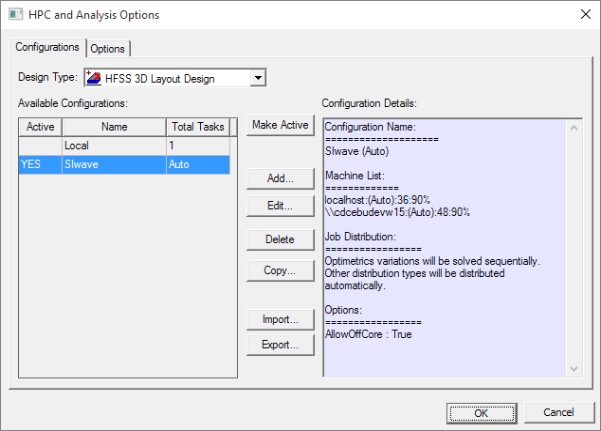

Suppose localhost has been configured to use 36 cores and 90% of available memory, and cdcebudevw15 has been configured to use 48 cores and 90% of available memory.

In total, there are 84 cores at our disposal, so each region configured to use 25% of total available resources gets 21 cores.

Memory is divided among the regions using the following formula:

HFSS region memory % = (% memory to use on machine) × (# cores to use for region) / (# cores available on machine)

Cores are allotted to regions starting with the first machine in the distributed solve setup (localhost, in this example). Once all cores on that machine have been exhausted, cores are pulled from the next machine in the list (cdcebudevw15, in this example). Once all cores on all machines have been exhausted, the solver loops back to the first machine and starts again.

To avoid resource contention, you must balance the compute resource percentage assigned to each region against the number of regions being solved in parallel. Working through this core/memory assignment logic for the example yields the following:

- Region 0 is allotted 21 cores from localhost; % memory = 90% × (21/36) = 52.5%

This leaves 15 remaining cores on localhost and 48 on cdcebudevw15.

- Region 1 is allotted 15 cores from localhost and 6 cores from cdcebudevw15 (21 cores total).

% memory on localhost = 90% × (15/36) = 37.5%

% memory on cdcebudevw15 = 90% × (6/48) = 11.25%

This leaves 0 remaining cores on localhost and 42 remaining cores on cdcebudevw15.

- Region 2 is allotted 21 cores from cdcebudevw15; % memory = 90% × (21/48) = 39.375%

This leaves 21 remaining cores on cdcebudevw15.

- Region 3 is allotted 21 cores from cdcebudevw15; % memory = 90% × (21/48) = 39.375%

All cores on all machines have been exhausted at this point, so the solver returns to the first machine in the distributed solve setup list and starts allotting cores again.

- Region 4 is allotted 21 cores from localhost; % memory = 90% × (21/36) = 52.5%
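The core and memory assignment worked through in this example can be sketched in a few lines of Python. This is only an illustration of the logic as described on this page, not the solver's actual implementation. The function name `allocate_regions`, the tuple layout for machines, and the rounding of fractional core counts down to a whole number are all assumptions made for the sketch.

```python
def allocate_regions(machines, region_percents):
    """Illustrative sketch of the allotment logic described above.

    machines: list of (name, cores, memory_percent) tuples, in the order
        they appear in the distributed solve setup (hypothetical layout).
    region_percents: percentage of total resources assigned to each region.
    Returns one dict per region: machine name -> (cores, memory percent).
    """
    total_cores = sum(cores for _, cores, _ in machines)
    remaining = [cores for _, cores, _ in machines]
    idx = 0  # machine currently supplying cores
    allocations = []

    for percent in region_percents:
        # Assumption: fractional core counts are rounded down.
        needed = total_cores * percent // 100
        region = {}
        while needed > 0:
            if remaining[idx] == 0:
                idx += 1
                if idx == len(machines):
                    # Every machine is exhausted: loop back to the first one.
                    remaining = [cores for _, cores, _ in machines]
                    idx = 0
                continue
            name, cores, mem_percent = machines[idx]
            take = min(needed, remaining[idx])
            remaining[idx] -= take
            needed -= take
            used = region.get(name, (0, 0.0))[0] + take
            # region memory % = (% memory on machine) x (region cores) / (machine cores)
            region[name] = (used, mem_percent * used / cores)
        allocations.append(region)
    return allocations


# The two machines and five 25% regions from the example above.
machines = [("localhost", 36, 90), ("cdcebudevw15", 48, 90)]
for i, region in enumerate(allocate_regions(machines, [25] * 5)):
    print(f"Region {i}: {region}")
```

Run against the example configuration, the sketch reproduces the core counts and memory percentages listed for Regions 0 through 4, including the return to localhost for Region 4 once both machines have been exhausted.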