The Data Processing Framework (DPF) is designed to provide numerical simulation users/engineers with a toolbox for accessing and transforming simulation data. DPF can access data from solver result files as well as several neutral formats (csv, hdf5, vtk, etc.). Various operators are available enabling the manipulation and the transformation of this data. DPF is a workflow-based framework that enables simple as well as complex evaluations by chaining operators. The data in DPF is defined based on physics agnostic mathematical quantities described in a self-sufficient entity called field. This allows DPF to be a modular and easy to use tool with a large range of capabilities. It is a product designed to handle large amounts of data.

In summary, DPF can be explained through the following concepts:

Operator: Is the only object used to create and transform the data. It is composed of a “core” (which will operate the calculation part), along with input and output “pins.” Those pins allow the user to provide input data to each operator. When the operator is evaluated, it will process the input information to compute its output ones. Operators can be chained together to create workflows.

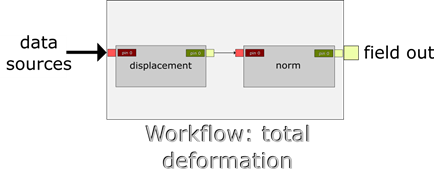

Workflow: Is the global entity that you will use. Built by chaining operators, it will evaluate the data processing defined by the used operators. It needs input information, and it will compute the requested output information. See workflows' examples below.

Data Sources: Are one or more files in which analysis results can be found.

Field: Is the main simulation data container. In numerical simulations, results data are defined by values associated with entities (scoping), and these entities are a subset of a model (support). In DPF, field data is always associated to its scoping and support, making the field a self-describing piece of data.

For example: For a Field of a nodal displacement, the displacements are the simulation data, the associated nodes are the scoping. The field also contains homogeneity and the unit of the data to complete the data definition to have it self-described.

Those are the four main data types. For any data processing, the workflow can be compared with a black box (illustrated below) in which some operators are chained, computing the information it is made for.

Other Data Types

Additional data types include:

Fields container: A fields container is a container of fields. It is used for example in transient/harmonic/modal or multi - steps static analysis,

Support: Is the physical entity to which the field is associated. For example, it can be a mesh, a geometrical entity, time, or frequency values.

Scoping: Is a subset of the model's support. Typically, scoping can represent nodes ids, element ids, time steps, frequencies, joints, etc. Therefore, the scoping can describe a spatial subset and / or a temporal one on which a Field is scoped. It is a part of the support.

Location

Location is the type of topology associated to the data container. DPF uses, for example, three different spatial locations, that are Nodal, Elemental, and ElementalNodal for finite element data. The Nodal type (resp. Elemental) describes data computed on the Nodes (resp. on the Element itself). These nodes and elements are identified by an Id – typically a node or element number. An ElementalNodal location describes a data defined on the Nodes of the elements, but you will need to use the Element Id to get it. Thus, you can define Elemental or Nodal scoping.

You can find the PDF-based documentation following the process presented below, using the Scripting option on the Automation tab.

The documentation will be generated at the requested location:

import mech_dpf import Ans.DataProcessing as dpf doc_op = dpf.operators.utility.html_doc() doc_op.inputs.output_path.Connect(r'MY_FILE_PATH\MY_FILE_NAME.html') doc_op.Run()